Important Trends: Generative AI, Open Source & Data-Driven BI

Last week I spilled coffee on my notebook right as I was jotting down “another model release.” The ink bled into a perfect little Rorschach test—and honestly, that’s what the AI news cycle has felt like lately: a blur unless you squint for patterns. So I did the squinting. Instead of chasing every shiny generative AI demo, I’m tracking the quieter shifts that actually change my day-to-day work: data quality finally getting its moment, smaller models behaving like grown-ups on edge devices, open source maturing (with rules), and a surprising comeback story—physical AI and robotics. This is my running “AI updates” log for what’s next in 2026, with a few personal asides and a couple of wild hypotheticals to keep it real.

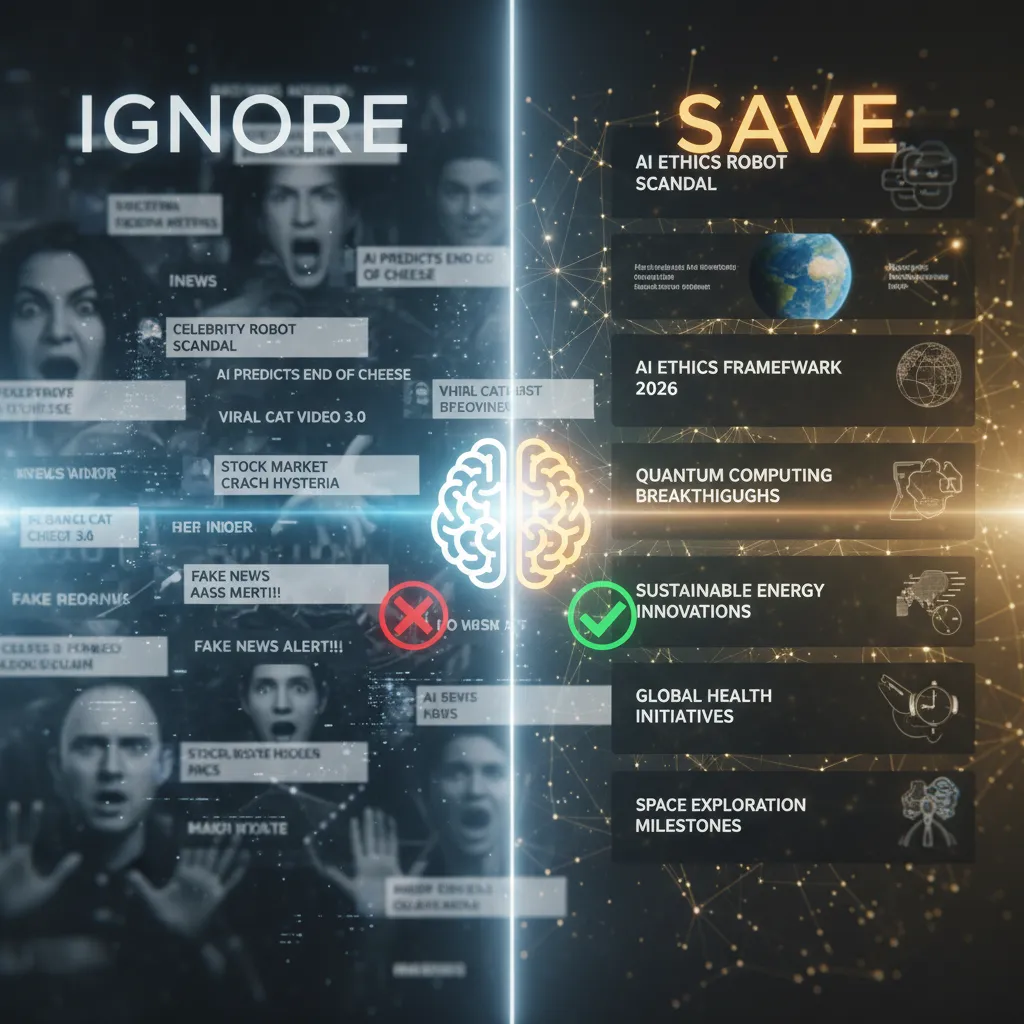

1) My 2026 AI news filter: what I ignore vs. what I save

When I scan Data Science AI News updates, I’ve changed what I treat as “signal.” I’ve stopped bookmarking “largest model” headlines and started saving anything that mentions data quality, real time, or inference workloads. Bigger models are interesting, but most teams I work with don’t fail because the model is too small—they fail because the data is late, messy, or hard to govern.

What I ignore (most days)

- Leaderboard wins with no notes on deployment, monitoring, or cost

- Flashy demos that hide the data pipeline behind a clean UI

- “One-click BI” claims that don’t explain definitions, lineage, or access control

What I save and share

- Anything about latency improvements for production inference

- Open source releases that make data validation or observability easier

- Real-time analytics and streaming features that reduce “dashboard lag”

- Notes on model risk, privacy, or audit trails (especially for BI)

My quick sanity checklist

Before I post an AI update in our team channel, I ask:

- Does it reduce latency?

- Does it reduce cost?

- Does it reduce risk (security, compliance, hallucinations)?

- Or does it just look cool?

Small confession: I still fall for flashy demos, right up until I ask the follow-up question: “What’s the data backbone?” If the answer is vague, I move on.

Wild card: the stand-up I expect to see

“Agent, why did you change the KPI?”

“Because the dashboard definition was ambiguous.”

“Compliance says ambiguity is a risk. Show lineage.”

“I can—if you approve the data contract.”

2) Generative AI grows up: data quality becomes the plot twist

In the latest Data Science AI News cycle, I’m noticing a shift: generative AI is being used less for “writing stuff” and more for cleaning, labeling, and stress-testing data. That feels like a sign the space is growing up. When teams move from demos to real BI, the biggest risk is not the model—it’s the messy dataset feeding it.

Why I’m seeing GenAI move into classification and inconsistency detection

In practice, I get more value when GenAI helps me sort and sanity-check data than when it drafts paragraphs. It can:

- Auto-classify tickets, survey comments, and product events into consistent categories

- Flag duplicates, outliers, and “this doesn’t match the schema” records

- Detect label drift (same concept, different names) across teams and tools
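The rule-based half of “GenAI + rules” is cheap to sketch. Below is a minimal Python example, assuming records arrive as dicts with hypothetical `id`, `category`, and `amount` fields; the schema and alias map are made up for illustration. A GenAI classifier would propose categories upstream, and these deterministic checks catch duplicates, schema mismatches, and label drift (outliers need a statistical check, which I keep separate).

```python
from collections import Counter

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"id": str, "category": str, "amount": float}

# Hypothetical alias map: different team spellings that mean the same concept.
LABEL_ALIASES = {
    "refund": "refund", "refunds": "refund", "money back": "refund",
    "defect": "defect", "bug": "defect",
}


def schema_violations(record: dict) -> list[str]:
    """List the ways a record fails to match SCHEMA (missing field or wrong type)."""
    problems = []
    for field, expected in SCHEMA.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            problems.append(
                f"{field} is {type(record[field]).__name__}, expected {expected.__name__}"
            )
    return problems


def find_duplicates(records: list[dict]) -> set:
    """IDs that appear more than once."""
    counts = Counter(r.get("id") for r in records)
    return {rid for rid, n in counts.items() if n > 1}


def drifted_labels(records: list[dict]) -> dict[str, str]:
    """Map raw labels that are aliases (same concept, different name) to the canonical label."""
    drift = {}
    for r in records:
        raw = str(r.get("category", "")).strip().lower()
        canonical = LABEL_ALIASES.get(raw)
        if canonical is not None and canonical != raw:
            drift[raw] = canonical
    return drift
```

In practice the alias map is the part GenAI helps build: it suggests candidate merges, and a human approves them before they become rules.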

A mini-story: one mislabeled metric, one week lost

I once shipped a BI + AI dashboard where a key metric was mislabeled. The number was correct, but the label implied a different definition. That tiny mismatch triggered a week of debate: marketing blamed tracking, product blamed the model, and leadership questioned the strategy. If we’d had basic data quality checks (definition tests, naming rules, and metric lineage), we would have caught it before anyone saw the chart.

Where data quality checks fit (before everything)

My current rule is simple: quality gates come before RAG, before dashboards, before fine-tuning. I like this order:

- Validate sources (freshness, completeness, schema)

- Standardize definitions (metrics, dimensions, labels)

- Run inconsistency detection (GenAI + rules)

- Only then: build RAG indexes, BI layers, or training sets
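Here is one way to encode that order: a minimal sketch where each gate is a function that returns `None` on pass or a failure message, and the pipeline stops at the first failure. The source metadata fields (`last_updated`, `non_null_rows`, `total_rows`, `columns`, `metrics`), the thresholds, and the metric registry are all hypothetical. Inconsistency detection would slot in as one more gate before the build step.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional

# A gate returns None when it passes, or a short failure message.
Gate = Callable[[dict], Optional[str]]

MAX_AGE = timedelta(hours=6)                               # hypothetical freshness SLA
MIN_COMPLETENESS = 0.98                                    # hypothetical share of complete rows
EXPECTED_COLUMNS = {"order_id", "revenue", "region"}       # hypothetical schema
METRIC_DEFINITIONS = {"net_revenue": "revenue - refunds"}  # hypothetical metric registry


def check_freshness(src: dict) -> Optional[str]:
    age = datetime.now(timezone.utc) - src["last_updated"]
    return None if age <= MAX_AGE else f"stale by {age - MAX_AGE}"


def check_completeness(src: dict) -> Optional[str]:
    total = src["total_rows"]
    ratio = src["non_null_rows"] / total if total else 0.0
    return None if ratio >= MIN_COMPLETENESS else f"only {ratio:.1%} complete"


def check_schema(src: dict) -> Optional[str]:
    missing = EXPECTED_COLUMNS - set(src["columns"])
    return None if not missing else f"missing columns {sorted(missing)}"


def check_definitions(src: dict) -> Optional[str]:
    # Every metric the source exposes must match its registered definition,
    # so a label can't quietly imply a different formula.
    for name, formula in src["metrics"].items():
        if METRIC_DEFINITIONS.get(name) != formula:
            return f"{name!r} does not match the registered definition"
    return None


# Order matters: validate sources first, then standardize definitions.
GATES: list[Gate] = [check_freshness, check_completeness, check_schema, check_definitions]


def run_gates(src: dict) -> Optional[str]:
    """Return the first failure, or None if it's safe to build RAG indexes, BI layers, or training sets."""
    for gate in GATES:
        failure = gate(src)
        if failure is not None:
            return f"{gate.__name__}: {failure}"
    return None
```

Stopping at the first failure is deliberate: a stale source makes every downstream check noisy, so fixing it first avoids chasing false alarms.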

What I’m watching next: decoupled observability

I’m watching for decoupled observability: monitoring that separates data issues from model behavior, so bad inputs don’t hide behind confident outputs.
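To make the idea concrete, here is a minimal sketch that keeps the two signal families apart and checks the data side first. The field names, the labeled “canary set,” and the thresholds are my own assumptions for illustration, not any particular tool’s API.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Signals collected for one monitoring window (e.g. the last 15 minutes)."""
    null_rate: float        # data side: share of missing input fields
    schema_errors: int      # data side: records that failed validation
    mean_confidence: float  # model side: self-reported, logged but NOT trusted for health
    canary_accuracy: float  # model side: accuracy on a small labeled canary set


def data_healthy(w: WindowStats, max_null_rate: float = 0.02) -> bool:
    return w.null_rate <= max_null_rate and w.schema_errors == 0


def model_healthy(w: WindowStats, min_accuracy: float = 0.90) -> bool:
    # Judge the model on labeled canaries, not on how confident it sounds.
    return w.canary_accuracy >= min_accuracy


def attribute(w: WindowStats) -> str:
    """Check inputs first, so bad data can't hide behind confident outputs."""
    if not data_healthy(w):
        return "data issue"
    if not model_healthy(w):
        return "model issue"
    return "ok"
```

Note that `mean_confidence` never decides health here; a window with 0.97 confidence and broken inputs still reads as a data issue.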

3) Smaller models, bigger impact: edge devices and real time wins

I used to assume “smaller models” meant “worse models”—then I watched a domain model beat a general one on a narrow task. In practice, a compact model trained (or tuned) for one workflow can be faster, cheaper, and more accurate than a giant model that tries to know everything.

Why edge inference is becoming the sensible default

From what I’m seeing in Data Science AI News, more teams are pushing inference closer to where data is created—phones, sensors, factory PCs, and on-prem boxes. The reasons are simple:

- Cost: fewer cloud tokens, fewer API calls, and less data transfer.

- Latency: real-time apps can’t wait on network hops and queue time.

- Data sovereignty: sensitive data stays local, which reduces compliance risk and exposure.[2]

Small language models + hybrid computing vs. cloud-only LLM calls

When I compare approaches, hybrid setups often win: run a small language model on-device for routine tasks, then “escalate” to the cloud only when needed. Cloud-only can look cheap at first, but the bill quietly grows as usage scales.

| Approach | Best for | Trade-off |
|---|---|---|
| Small model + edge/hybrid | Fast, frequent, local decisions | More deployment work |
| Cloud-only LLM | Complex, occasional requests | Latency + variable cost |
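The escalation pattern is simple to express. A minimal sketch, assuming two hypothetical model callables and that the small model returns some confidence score (many don’t out of the box, so treat that as a design assumption). The thresholds are placeholders to tune against your own evals.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    confidence: float  # 0..1; assumes the model (or a wrapper) exposes a usable score
    source: str        # "edge" or "cloud"


Model = Callable[[str], Answer]


def route(prompt: str, local: Model, cloud: Model,
          min_confidence: float = 0.8, max_local_chars: int = 2000) -> Answer:
    """Try the on-device small model first; escalate to the cloud only when needed."""
    if len(prompt) > max_local_chars:  # crude proxy for "too complex for the small model"
        return cloud(prompt)
    answer = local(prompt)
    if answer.confidence >= min_confidence:
        return answer
    return cloud(prompt)  # low confidence: pay for the cloud call
```

The design choice worth noting: the cloud call is the exception path, so its cost scales with hard requests rather than with total traffic.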

Unpopular opinion: predictable beats “creative” in real time

For real-time BI alerts, anomaly flags, or safety checks, I still reach for traditional AI (rules, forecasting, classifiers). Generative AI is powerful, but predictable behavior matters more than clever phrasing.
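For contrast, here is what “predictable” can look like in code: a rolling z-score alert for a daily metric. It’s a minimal sketch with a hypothetical window and threshold, not a tuned detector, but its behavior is fully explainable to whoever gets paged.

```python
from statistics import mean, stdev


def zscore_alerts(values: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Indexes whose value sits more than `threshold` standard deviations away from the
    mean of the preceding `window` points (window must be >= 2). Same input, same alerts."""
    alerts = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is worth a look.
            if values[i] != mu:
                alerts.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts
```

On a series like `[100, 102, 98, 101, 99, 100, 103, 101, 160, 100]`, only the jump to 160 is flagged; the return to 100 afterward is not, because the spike widens the next window’s spread.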

“Use the smallest model that reliably solves the job—then scale up only when the task demands it.”
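That rule translates almost directly into a selection function. A sketch, assuming you already have a task-specific eval score per candidate; the model names and numbers in the usage are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    params_billions: float
    task_accuracy: float  # from *your* task-specific eval set, not a public leaderboard


def smallest_reliable(candidates: list[Candidate], min_accuracy: float) -> Optional[Candidate]:
    """Pick the smallest model that clears the bar; None means nothing qualifies yet."""
    for c in sorted(candidates, key=lambda c: c.params_billions):
        if c.task_accuracy >= min_accuracy:
            return c
    return None
```

Raising `min_accuracy` is the explicit “scale up only when the task demands it” lever: the function moves to a bigger model only when smaller ones fail the bar.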

4) Open source AI in 2026: the messy, necessary middle

In 2026, open source AI still feels like a neighborhood potluck: amazing variety, and the occasional food poisoning. That’s not a knock—it’s reality. The upside is speed and choice. The downside is that governance matters more than ever, especially when models move from demos into data-driven BI workflows.

What I’m seeing in the open source AI news cycle

From the latest Data Science AI News updates, three patterns keep showing up: global model diversification, more serious interoperability standards, and hardened governance as teams try to reduce risk while keeping innovation open.[2]

- Global diversification: more strong models trained outside the usual hubs, tuned for local languages and rules.

- Interoperability: better support for shared formats, model packaging, and toolchains that let me swap components without a full rebuild.

- Governance: clearer policies for releases, safety notes, and responsible use—still uneven, but improving.[2]

My practical checklist before I adopt an open source model

Before I put an open model anywhere near production analytics, I run a simple checklist:

- Licensing: Can I use it commercially? Are weights, data, and outputs covered?

- Evals: Do I have task-based tests (accuracy, bias, hallucinations) that match my BI use case?

- Security posture: Supply chain, signed artifacts, vulnerability history, and safe defaults.

- Maintenance: Who fixes issues when hype moves on—an active community, a foundation, or one tired maintainer?
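Writing the checklist down as a function keeps reviews consistent across models. A minimal sketch: the fields mirror the four checklist items, and the maintainer threshold is my own hypothetical cutoff. A single “signed artifacts” flag stands in for the whole security posture, which in practice needs more than one boolean.

```python
from dataclasses import dataclass


@dataclass
class OpenModelReview:
    commercial_use_ok: bool   # licensing covers weights, data, and outputs for my use
    task_evals_pass: bool     # accuracy, bias, and hallucination tests for my BI use case
    artifacts_signed: bool    # security posture: signed, verifiable artifacts
    active_maintainers: int   # maintenance: people actually fixing issues


def blocking_items(review: OpenModelReview, min_maintainers: int = 2) -> list[str]:
    """Return the checklist items that block production use; an empty list means go."""
    blockers = []
    if not review.commercial_use_ok:
        blockers.append("licensing")
    if not review.task_evals_pass:
        blockers.append("evals")
    if not review.artifacts_signed:
        blockers.append("security")
    if review.active_maintainers < min_maintainers:  # one tired maintainer isn't enough
        blockers.append("maintenance")
    return blockers
```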

Small tangent: why “open source” debates get emotional

I’ve learned these arguments aren’t just about tooling. For many teams, “open source AI” is identity: control, trust, and fairness. That’s why discussions about “open weights” vs “fully open” can feel personal, even when the real question is operational risk.

5) Physical AI and robotics AI: when software meets gravity

In the latest Data Science AI News cycle, Physical AI is the part that makes me sit up—because the real world doesn’t accept “almost correct.” A chatbot can be vague and still feel helpful. A robot that is vague drops a box, clips a shelf, or stops a line. When software meets gravity, errors become visible, measurable, and expensive.

Why robotics AI is rising now

I’m seeing more attention shift toward action + sensing, not just text. One reason is simple: we’re hitting diminishing returns from scaling large language models, so teams look for new gains in perception, control, and planning in real environments.[2] That means better vision models, better simulation-to-real transfer, and tighter feedback loops between sensors and decisions.

The BI AI twist: performance is a data quality problem first

Here’s where data-driven BI connects directly to robotics: measuring robot performance is often a data quality problem before it’s an “AI” problem. If my telemetry is wrong, my dashboards lie.

- Sensor drift: cameras and IMUs slowly change, so “normal” readings shift.

- Label noise: human annotations for defects, obstacles, or grasp success can be inconsistent.

- Environment shifts: lighting, floor friction, and layout changes break yesterday’s model.
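Sensor drift is the sneaky one because no single reading looks wrong. One common way to catch it is an exponentially weighted moving average (EWMA) compared against a calibration baseline. A minimal sketch; the baseline, tolerance, and `alpha` are hypothetical and would come from your own calibration data.

```python
from typing import Optional


def ewma_drift(readings: list[float], baseline: float, tolerance: float,
               alpha: float = 0.1) -> Optional[int]:
    """Return the first index where the exponentially weighted moving average of the
    readings leaves baseline +/- tolerance, or None if it never does.
    Smoothing means a single noisy reading rarely trips it; a sustained shift does."""
    smoothed = baseline
    for i, x in enumerate(readings):
        smoothed = alpha * x + (1 - alpha) * smoothed
        if abs(smoothed - baseline) > tolerance:
            return i
    return None
```

With `alpha=0.1`, a sustained step from 0.0 to 1.0 crosses a 0.5 tolerance on the seventh shifted reading, while a one-off spike to 3.0 never does. Smaller `alpha` means fewer false alarms but slower detection.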

In Physical AI, the KPI isn’t just accuracy—it’s safe, repeatable behavior under changing conditions.

Wild card scenario: agentic ops for warehouses

I can imagine an agentic system coordinating a fleet of warehouse robots like a tiny operations manager: assigning picks, rerouting around congestion, and negotiating battery schedules so chargers don’t become a bottleneck. The BI layer would track cycle time, near-misses, charge utilization, and “exceptions per shift,” turning robotics into a living, data-driven process.

6) Healthcare AI and the ‘health gap’: from symptom triage to treatment planning

I’m optimistic about healthcare AI, with a caveat: it’s only as good as the data quality and the workflow it respects. In the latest Data Science AI News updates, I keep seeing the same shift—tools are moving from lab demos to products that real people use when they feel sick, worried, or stuck in a long wait for care.

What’s changing: triage and planning are becoming consumer products

Symptom triage and treatment planning are no longer just research prototypes. They’re showing up as front-door experiences in apps, portals, and chat-based assistants, often powered by generative AI and paired with data-driven BI dashboards for clinicians. That matters because it can reduce friction: faster intake, clearer next steps, and better routing to the right level of care—especially in underserved areas.[3]

A personal-ish note: uncertainty needs to be honest

I’ve watched friends get conflicting advice from different symptom checkers—one says “urgent,” another says “rest and fluids.” The next wave of healthcare AI has to handle uncertainty openly. I want systems that say what they know, what they don’t, and what would change the recommendation (for example, “if fever lasts > 48 hours, seek care”).

“Healthcare AI should communicate uncertainty, not hide it behind confident wording.”
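One way to make that honesty structural rather than a matter of wording is to require every recommendation to carry what it knows, what it doesn’t, and what would change it. This is a minimal sketch of the output shape only, with hypothetical field names; it contains no clinical logic, and any example content is illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class TriageAdvice:
    recommendation: str
    confidence: str                                           # coarse on purpose: "low" / "medium" / "high"
    known: list[str] = field(default_factory=list)            # what the advice is based on
    unknown: list[str] = field(default_factory=list)          # what the system can't tell
    change_triggers: list[str] = field(default_factory=list)  # what would change the advice

    def render(self) -> str:
        lines = [f"Suggestion: {self.recommendation} (confidence: {self.confidence})"]
        if self.known:
            lines.append("Based on: " + "; ".join(self.known))
        if self.unknown:
            lines.append("Not known: " + "; ".join(self.unknown))
        if self.change_triggers:
            lines.append("This changes if: " + "; ".join(self.change_triggers))
        return "\n".join(lines)
```

Because `change_triggers` is a first-class field, a reviewer can see at a glance when an assistant gave advice without saying what would escalate it.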

Where I see risk: explanations, bias, and the health gap

- Model explanations: clinicians and patients need simple reasons, not black-box outputs.

- Bias: uneven training data can misread symptoms across age, sex, race, and language.

- The health gap: if the best AI tools ship first to well-funded systems, outcomes may diverge.

For me, the goal is practical: AI that fits real clinical workflows, improves access, and stays accountable when the data is messy.

7) AI infrastructure: hybrid architecture, AI SRE, and the inference era

When I look back at 2023–2024, the big question was: can we train it? In 2026, the question feels more practical: can we run it reliably on Monday at 9am? That shift matters because most real business value now comes from inference, not flashy training demos. “Data Science AI News” has tracked how teams are moving from one-off GPU projects to repeatable AI infrastructure that behaves like any other critical service.

Hybrid architecture becomes the default

To keep costs and latency under control, I see more hybrid setups: some workloads stay in cloud, some move on-prem, and some sit close to users at the edge. At the same time, infrastructure is evolving toward dense, distributed “superfactories” and even hybrid quantum–AI–supercomputing approaches to squeeze more efficiency out of compute and energy budgets.[3]

AI SRE shows up when inference dominates

Once inference traffic becomes steady and high-volume, the operational reality hits. This is where AI SRE (site reliability engineering for AI) becomes real work, not a title. I also notice more focus on decoupled observability: monitoring that is not tied to one vendor stack, so teams can track models, GPUs, and services across environments.[4]

- Reliability targets: latency SLOs, error budgets, and capacity planning for peak hours

- Model health: drift checks, prompt/response logging policies, and rollback paths

- Cost control: batching, caching, quantization, and smart routing across models
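The reliability targets reduce to a bit of arithmetic worth automating. A minimal sketch: a nearest-rank percentile for latency SLOs and an error-budget calculation. The SLO value and sample latencies are hypothetical, and real setups usually compute these in the monitoring stack rather than in application code.

```python
import math


def nearest_rank_percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def error_budget_remaining(total: int, failed: int, slo: float) -> float:
    """Share of the error budget left in the window; slo=0.999 tolerates 0.1% failures.
    1.0 = untouched, 0.0 = exhausted, negative = overspent."""
    allowed = (1 - slo) * total
    if allowed <= 0:
        return 1.0 if failed == 0 else 0.0
    return 1 - failed / allowed
```

For example, 400 failures out of 1,000,000 requests against a 99.9% SLO leaves about 60% of the budget. And with only ten latency samples, p95 is simply the slowest request, a reminder that tail latency needs enough traffic to mean anything.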

My take: infrastructure is now a product

In my experience, AI infrastructure only works when it is treated like a product: it needs a budget, an owner, on-call rotations, and “boring” runbooks that explain what to do when GPUs fail, queues back up, or a model update breaks outputs.

Conclusion: My ‘release notes’ mindset for AI tech in 2026

After following Data Science AI News: Latest Updates and Releases, I’m closing this 2026 log with a simple set of bets: data quality will matter more than flashy demos, smaller models will win more real workloads, open source governance will decide what teams can safely adopt, and we’ll see faster progress in physical AI (robots, sensors, edge devices) and healthcare AI (clinical workflows, imaging, triage). Under all of it, infrastructure maturity (better inference stacks, monitoring, and cost controls) will turn “cool” into “shippable.”

To stay sane, I’m keeping one practice: I write my own release notes after reading AI news. I don’t copy headlines. I capture three lines: what changed (new model, new benchmark, new policy), what I’ll test (one small experiment in my stack), and what I’ll ignore (anything that can’t explain its data, evaluation, or deployment path). This keeps generative AI and data-driven BI grounded in outcomes, not hype.

My wild card is this: imagine agentic AI running an internal newsroom for your company. It scans model updates, open source releases, and BI changes—but it only publishes an “update” if it survives a data quality audit: lineage is clear, metrics are reproducible, and the impact is measurable. That kind of filter would make open source and enterprise AI feel less noisy and more trustworthy.
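The audit rule is concrete enough to sketch. A minimal, hypothetical version: the three checks mirror the three conditions (clear lineage, reproducible metrics, measurable impact), while the field names and the 1% reproducibility tolerance are my own assumptions. The agent part, deciding what to scan, is out of scope here.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class CandidateUpdate:
    title: str
    lineage: list[str]                # upstream sources the claim depends on
    metric_runs: list[float]          # the headline metric, recomputed independently
    measured_impact: Optional[float]  # e.g. latency change in ms; None if never measured


def passes_audit(u: CandidateUpdate, rel_tol: float = 0.01) -> bool:
    lineage_clear = len(u.lineage) > 0
    reproducible = len(u.metric_runs) >= 2 and all(
        math.isclose(run, u.metric_runs[0], rel_tol=rel_tol) for run in u.metric_runs
    )
    measurable = u.measured_impact is not None
    return lineage_clear and reproducible and measurable


def publishable(updates: list[CandidateUpdate]) -> list[str]:
    """Titles of updates that survive the audit; everything else stays unpublished."""
    return [u.title for u in updates if passes_audit(u)]
```

Requiring at least two metric runs is the key design choice: a number that has only been computed once can’t be called reproducible yet.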

If you take one thing from this conclusion, let it be an invitation: pick one trend—smaller models, open source governance, physical AI, healthcare AI, or infrastructure maturity—and run a two-week experiment. Write your own release notes at the end. Results beat hot takes.

TL;DR: In 2026, the most meaningful data science AI news isn’t just bigger generative AI—it’s better data quality, smaller models on edge devices, stricter open-source governance, physical AI/robotics momentum, healthcare AI moving from triage to planning, and infrastructure shifting toward inference-heavy, hybrid architectures.
