AI Trends 2026: Notes From Data Science Leaders

Last month I re-listened to an “expert interview” episode while stuck in a delayed train car with spotty Wi‑Fi—ironically the perfect setting to think about what AI can’t magically fix. When the leaders on the recording kept circling back to unsexy topics like data quality and infrastructure, I caught myself nodding a little too hard. I’ve shipped enough dashboards and models to know the pattern: the flashy demo gets the budget, but the boring parts decide whether anything survives Monday morning. So I rebuilt my notes into a field guide for AI trends 2026—sprinkled with the kinds of side-comments I’d normally only admit in a team retro.

1) My “hype vs. Monday” test for AI trends 2026

I have a simple litmus test for AI trends 2026: if it doesn’t help on a chaotic Monday, it’s probably just conference glitter. Mondays are when dashboards break, stakeholders want answers fast, and the “cool demo” meets messy reality. In the expert interview with data science leaders, the most useful ideas weren’t flashy—they were the ones that survived real operations.

From model obsession to systems thinking

One clear shift I heard (and felt in my own work) is that teams are moving from “Which model is best?” to “Which system is reliable?” That means tools, workflows, and governance matter as much as accuracy. A strong model inside a weak pipeline still fails. So when I evaluate AI trends 2026, I look for signs of systems thinking: monitoring, data contracts, human review, and clear ownership.

A quick tangent: the demo that died on Monday

I once watched a “perfect” demo fall apart in production because the data feed updated six hours late. The model was fine. The UI was polished. But the business needed near real-time analytics, and we were serving yesterday’s truth. That day taught me that latency is not a technical detail—it’s a product requirement.

Mini-checklist I stole from my own mistakes

Now I run every new AI trend through this quick checklist:

- Input quality: Do we trust the data, and can we prove it?

- Latency: How fresh does the data need to be to make decisions?

- Accountability: Who owns failures, approvals, and model changes?

- Cost to run: What’s the real price of compute, storage, and support?

My wild-card analogy

Building AI without data quality is like meal-prepping with spoiled groceries—technically efficient, practically tragic. You can automate the chopping, label the containers, and optimize the schedule, but the outcome is still bad. That’s why the leaders I interviewed kept circling back to data foundations and operational discipline as the real AI trends for 2026.

2) Generative AI grows up: from output to input (aka data quality)

In “Expert Interview: Data Science Leaders Discuss AI,” the point I heard repeatedly was that generative AI is growing up. It’s less about flashy text output and more about being a backstage worker: auto-classifying, spotting inconsistencies, and cleaning the mess before anyone sees it. In other words, the real win is often better inputs, not prettier outputs.

Generative AI as a data quality assistant

Leaders kept coming back to the same pattern: use generative AI to do the heavy lifting on messy, high-volume work, then use more traditional checks to keep it honest. This is where “AI trends 2026” starts to look very practical: the model becomes part of the data pipeline, not just a chat box.

A practical workflow: label tickets, then validate

A simple example I noted: using generative AI to label support tickets (topic, urgency, sentiment), then validating with rules or classic ML for edge cases. The goal is speed from the model and control from the checks.

- GenAI labeling: classify each ticket into a standard taxonomy.

- Consistency checks: detect missing fields, conflicting tags, or odd phrasing.

- Rule/ML validation: catch edge cases (refund threats, legal terms, VIP accounts).

Even a blunt one-line rule (written here as Python) catches a costly edge case:

if "chargeback" in ticket.text.lower(): priority = "P0"
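Here is a minimal sketch of the label-then-validate flow, assuming a hypothetical four-topic taxonomy and P0–P3 urgency scale. The `genai_label` function is a keyword stub standing in for a real LLM call, so the example runs offline; every name is illustrative, not a specific tool's API.

```python
from dataclasses import dataclass, field

# Hypothetical taxonomy; a real one would come from your support team.
TOPICS = {"billing", "shipping", "account", "other"}
URGENCIES = {"P0", "P1", "P2", "P3"}


@dataclass
class Ticket:
    text: str
    account_tier: str = "standard"
    labels: dict = field(default_factory=dict)
    issues: list = field(default_factory=list)


def genai_label(ticket: Ticket) -> dict:
    """Stand-in for a generative model call (hypothetical).

    A real pipeline would prompt an LLM for topic, urgency, and sentiment;
    this stub uses keywords so the example runs without a model.
    """
    text = ticket.text.lower()
    topic = "billing" if ("refund" in text or "charge" in text) else "other"
    return {"topic": topic, "urgency": "P2", "sentiment": "neutral"}


def consistency_checks(ticket: Ticket) -> None:
    # Flag missing fields and labels outside the agreed taxonomy.
    for key in ("topic", "urgency", "sentiment"):
        if key not in ticket.labels:
            ticket.issues.append(f"missing:{key}")
    if ticket.labels.get("topic") not in TOPICS:
        ticket.issues.append("unknown_topic")
    if ticket.labels.get("urgency") not in URGENCIES:
        ticket.issues.append("unknown_urgency")


def rule_validation(ticket: Ticket) -> None:
    # Deterministic rules override the model on high-risk edge cases.
    text = ticket.text.lower()
    if "chargeback" in text:
        ticket.labels["urgency"] = "P0"
    if "lawyer" in text or "legal action" in text:
        ticket.labels["urgency"] = "P0"
        ticket.issues.append("legal_review")
    if ticket.account_tier == "vip" and ticket.labels.get("urgency") in {"P2", "P3"}:
        ticket.labels["urgency"] = "P1"


def process(ticket: Ticket) -> Ticket:
    ticket.labels = genai_label(ticket)
    consistency_checks(ticket)
    rule_validation(ticket)
    return ticket
```

The design choice is ordering: the model does the bulk labeling, and the rules run last so they always win on the cases you can't afford to get wrong.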

The uncomfortable bit: quality checks shift left

One uncomfortable truth from these data science leaders: quality checks don’t go away. They shift left into the pipeline. Instead of reviewing model outputs at the end, teams invest earlier in data quality improvement: clearer schemas, better labels, stronger lineage, and repeatable tests.
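“Shifting left” can be as plain as a schema test that runs before any model sees the data. A minimal sketch, assuming a hypothetical ticket-feed contract and a 1% error budget; a real team might use a dedicated validation library instead of hand-rolled checks.

```python
from datetime import datetime

# Hypothetical contract for a ticket feed: field name -> required Python type.
CONTRACT = {"ticket_id": str, "created_at": datetime, "body": str, "label": str}
ALLOWED_LABELS = {"billing", "shipping", "account", "other"}


def validate_row(row: dict) -> list:
    """Return contract violations for one record (an empty list means OK)."""
    errors = []
    for name, expected_type in CONTRACT.items():
        if row.get(name) is None:
            errors.append(f"{name}: missing")
        elif not isinstance(row[name], expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}")
    if isinstance(row.get("label"), str) and row["label"] not in ALLOWED_LABELS:
        errors.append(f"label: unknown value {row['label']!r}")
    return errors


def validate_batch(rows: list, max_error_rate: float = 0.01) -> bool:
    """Fail the whole batch if too many rows break the contract."""
    bad = sum(1 for row in rows if validate_row(row))
    return bad / max(len(rows), 1) <= max_error_rate
```

Failing the batch, rather than silently dropping bad rows, is what makes the check repeatable and visible to whoever owns the upstream feed.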

Why smaller and domain models matter

I also heard why “smaller models” and domain models are winning: they’re cheaper to run, easier to evaluate, and less likely to hallucinate in narrow contexts. When the task is specific (like ticket routing), a focused model can be more reliable than a giant general one.

My rule of thumb: if you can’t trace an answer back to a dataset you trust, you don’t have “AI”, you have “vibes”.

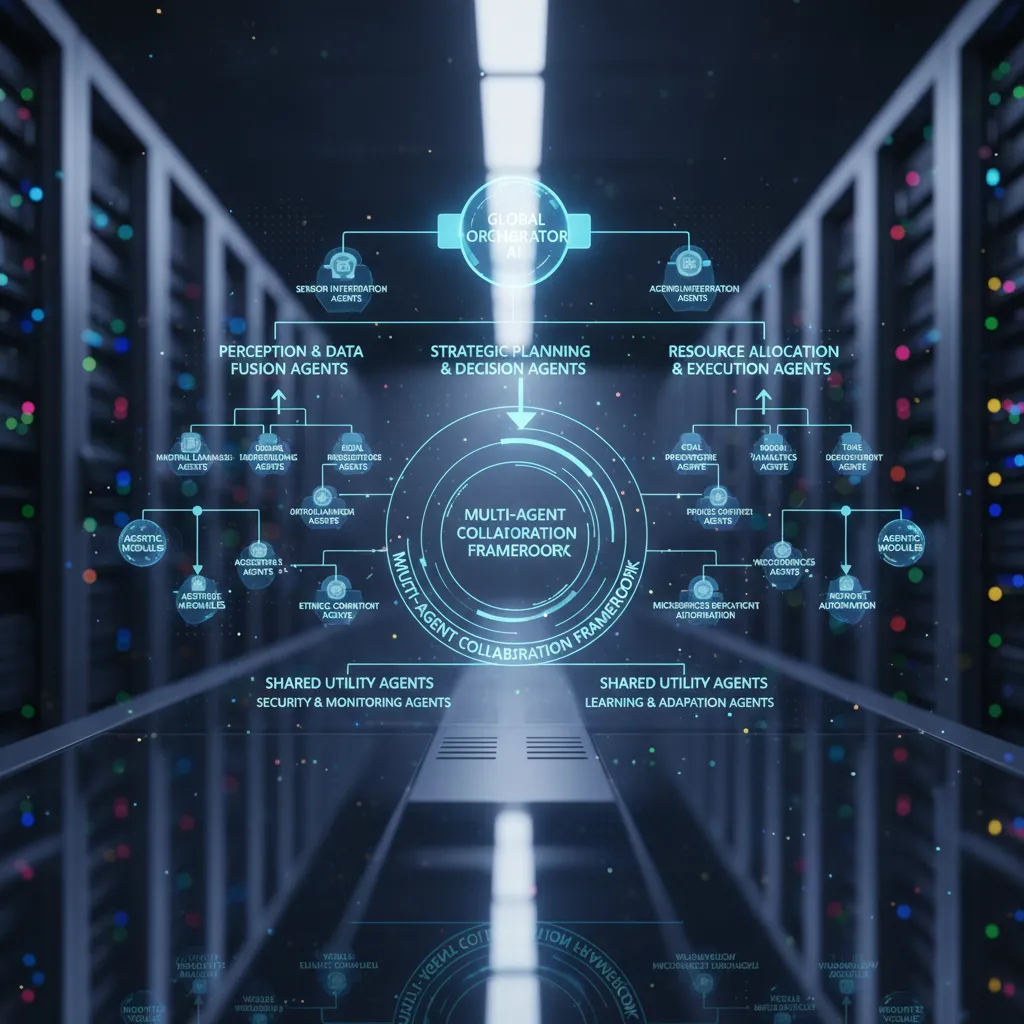

3) Multi-agent AI and agentic AI: the new org chart (but for software)

In the expert interview with data science leaders, one idea finally clicked for me: multi-agent AI makes more sense when I stop picturing “one smart bot” and start picturing a tiny team. I now imagine a planner who sets the steps, a retriever who pulls facts from tools and docs, a verifier who checks the output, and a “grumpy” risk checker whose job is to say no when something looks unsafe or off-policy.

Why multi-agent systems win in messy workflows

I don’t think multi-agent and agentic AI really win at single-shot Q&A. They win at the messy work: triage, handoffs, and decision trails. In real orgs, tasks bounce between people, and the “why” matters as much as the “what.” Multi-agent systems can mirror that flow by splitting responsibilities and leaving a clearer audit path.

- Triage: decide what matters and what can wait

- Handoffs: move work between roles without losing context

- Decision trails: record what was checked, by whom, and why
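To make the “tiny team” concrete, here is a minimal sketch of the four roles passing a shared context and writing a decision trail. Each role is a plain stub function (in practice each might wrap its own model or tool), and the refund policy is a hypothetical example, not anything from the interview.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Shared state handed from role to role, plus a decision trail."""
    request: str
    plan: list = field(default_factory=list)
    facts: dict = field(default_factory=dict)
    draft: str = ""
    approved: bool = False
    trail: list = field(default_factory=list)  # (role, note) pairs

    def log(self, role: str, note: str) -> None:
        self.trail.append((role, note))


# Each role is a stub; in practice each could wrap its own model, prompt, or tool.
def planner(ctx: Context) -> None:
    ctx.plan = ["retrieve", "draft", "verify", "risk_check"]
    ctx.log("planner", f"plan={ctx.plan}")


def retriever(ctx: Context) -> None:
    ctx.facts["refund_window_days"] = 30  # hypothetical policy lookup
    ctx.log("retriever", "fetched refund policy")


def drafter(ctx: Context) -> None:
    days = ctx.facts["refund_window_days"]
    ctx.draft = f"Re: {ctx.request} Refunds are accepted within {days} days."
    ctx.log("drafter", "drafted reply")


def verifier(ctx: Context) -> bool:
    ok = str(ctx.facts["refund_window_days"]) in ctx.draft
    ctx.log("verifier", "draft cites policy" if ok else "draft missing policy fact")
    return ok


def risk_checker(ctx: Context) -> bool:
    # The "grumpy" role: refuse anything that sounds like a promise we can't keep.
    risky = "guarantee" in ctx.draft.lower()
    ctx.log("risk_checker", "blocked: promise language" if risky else "no policy risk found")
    return not risky


ROLES = {"retrieve": retriever, "draft": drafter, "verify": verifier, "risk_check": risk_checker}


def run(request: str) -> Context:
    ctx = Context(request)
    planner(ctx)
    for step in ctx.plan:
        # Checking roles return False to stop the flow and hand off to a human.
        if ROLES[step](ctx) is False:
            ctx.log("handoff", f"stopped at {step}; sent to human review")
            return ctx
    ctx.approved = True
    return ctx
```

The trail is the point: after a run you can read back what was checked, by which role, and why the flow stopped or continued.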

A simple agentic AI scenario (that feels real)

One leader described agentic systems as software that can take action, not just answer. A scenario I keep coming back to: an AI agent that negotiates meeting times, checks company policy (like travel rules or attendee limits), drafts the email, and logs the decision in the CRM or ticketing system. That’s not “chat.” That’s a workflow with responsibility.
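The meeting scenario can be sketched as one function that negotiates, checks policy, drafts, and logs. This assumes calendars are simplified to sets of free start hours, and the attendee limit and work hours are hypothetical policy values; `crm_log` is a plain list standing in for a CRM or ticketing system.

```python
from typing import Dict, List, Optional, Set

MAX_ATTENDEES = 8               # hypothetical company policy
WORK_HOURS = set(range(9, 17))  # hypothetical: meetings may start 09:00 to 16:00


def find_slot(calendars: Dict[str, Set[int]]) -> Optional[int]:
    """Earliest start hour that is free for everyone and inside work hours."""
    common = set(WORK_HOURS)
    for free_hours in calendars.values():
        common &= free_hours
    return min(common) if common else None


def schedule(calendars: Dict[str, Set[int]], crm_log: List[dict]) -> Optional[str]:
    """Negotiate a time, check policy, draft the email, and log the decision."""
    attendees = sorted(calendars)
    if len(attendees) > MAX_ATTENDEES:
        crm_log.append({"action": "rejected", "reason": "attendee limit", "attendees": attendees})
        return None
    slot = find_slot(calendars)
    if slot is None:
        crm_log.append({"action": "escalated", "reason": "no common slot", "attendees": attendees})
        return None
    email = f"Proposed meeting at {slot:02d}:00 with {', '.join(attendees)}."
    crm_log.append({"action": "scheduled", "slot": slot, "attendees": attendees})
    return email
```

Note that every branch writes a log entry, including the failures; a workflow “with responsibility” has to explain its refusals as well as its successes.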

“You’re not testing a model response anymore—you’re testing a system of roles.”

Why evaluation gets weird (I learned this the hard way)

I felt this firsthand on a chatbot + toolchain project. Accuracy was only part of the story. The hard bugs were about coordination: the planner calling the wrong tool, the retriever pulling stale data, or the verifier missing a policy edge case. Evaluation becomes about reliability across steps, not one final answer.
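One way to evaluate “reliability across steps” is to score each step of a recorded run, not just the final answer. A minimal sketch, assuming a hypothetical trace format where each step records which tool it called and, for retrieval, how old the data was:

```python
from collections import defaultdict


def evaluate(traces, expected_tools, max_data_age_s=3600):
    """Score each step and the full run.

    A trace is a list of step records from one run; the field names
    ("step", "tool", "data_age_s") are hypothetical placeholders.
    """
    counts = defaultdict(lambda: [0, 0])  # step name -> [passed, total]
    runs_passed = 0
    for trace in traces:
        run_ok = True
        for rec in trace:
            name = rec["step"]
            ok = rec["tool"] == expected_tools[name]  # right tool called?
            if "data_age_s" in rec:
                ok = ok and rec["data_age_s"] <= max_data_age_s  # fresh enough?
            counts[name][0] += int(ok)
            counts[name][1] += 1
            run_ok = run_ok and ok
        runs_passed += int(run_ok)
    step_rates = {name: passed / total for name, (passed, total) in counts.items()}
    return step_rates, runs_passed / len(traces)
```

Per-step rates show *where* coordination broke (wrong tool vs. stale retrieval), which a single end-to-end accuracy number hides.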

The open question I’m still chewing on: when do we stop calling these systems “assistants” and start calling them colleagues?

4) Edge AI reality + smaller models: when latency becomes a personality trait

Edge AI stopped feeling theoretical the first time I watched a model fail because the network dropped. The prediction was “right” in the cloud, but useless in the moment. After that, cloud-only stopped sounding modern and started sounding like a liability—especially for factories, retail devices, vehicles, and clinical tools where seconds matter.

What’s maturing in edge AI (for real)

From the leaders I interviewed, the shift is not just “run AI on devices.” It’s a more practical mix of:

- Smaller models that fit memory and power limits, often distilled or quantized.

- Domain-optimized inference (models tuned for one job, one sensor, one workflow).

- Data sovereignty choices: deciding what must stay on-device, what can sync later, and what never leaves a site.
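For the “quantized” part, the core idea fits in a few lines. This is a sketch of simple symmetric, per-tensor int8 quantization on a plain Python list; production toolchains typically add per-channel scales and calibration data, so treat this as the concept rather than any specific framework’s method.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest weight to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.031, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding error per weight is at most scale / 2.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)
```

Each int8 value takes 1 byte instead of the 4 bytes of a float32, which is a big part of why quantized models fit edge memory budgets.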

Trade-offs I’d actually put in writing

Edge deployments force honest trade-offs. I like to document them early so nobody is surprised later:

- Latency vs accuracy: the fastest model may be “good enough,” and that’s the point.

- Cost vs maintainability: cheaper hardware can raise long-term support costs.

- On-device privacy vs update cadence: keeping data local is great, but updates get harder when devices are scattered.
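The latency-vs-accuracy trade-off becomes easier to write down if you make it a selection rule: take the most accurate model that fits the device. A minimal sketch; the three candidates and their numbers are hypothetical, not benchmarks.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    accuracy: float        # offline eval score on your own test set
    p95_latency_ms: float  # measured on the target device, not a laptop
    size_mb: float


def pick_model(candidates: List[Candidate], latency_budget_ms: float,
               memory_limit_mb: float) -> Optional[Candidate]:
    """Most accurate model that fits the device's latency and memory limits."""
    fits = [c for c in candidates
            if c.p95_latency_ms <= latency_budget_ms and c.size_mb <= memory_limit_mb]
    return max(fits, key=lambda c: c.accuracy, default=None)


# Hypothetical benchmark numbers, for illustration only.
models = [
    Candidate("large", 0.93, 180.0, 900.0),
    Candidate("distilled", 0.90, 40.0, 250.0),
    Candidate("tiny-int8", 0.84, 12.0, 60.0),
]
print(pick_model(models, latency_budget_ms=50, memory_limit_mb=512).name)  # distilled
```

Returning `None` when nothing fits is deliberate: it forces the “what do we ship instead?” conversation before deployment rather than after.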

A quick aside on open-source AI

I love open-source AI here because you can inspect what the model does, slim it down for edge constraints, and fine-tune without waiting for an API vendor to add a feature. That control matters when your “deployment target” is a kiosk, a camera, or a handheld scanner.

Practical checklist for edge deployments

- Monitoring: device health, drift signals, and inference latency.

- Rollback plan: versioned models and a safe fallback behavior.

- Offline clause: write down “what happens offline?” and test it.
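The rollback plan and the offline clause can live in the same small runtime. A minimal sketch, assuming hypothetical names throughout: versions are kept in a list so rollback is a pop, a failing model returns a safe default label, and results are buffered in a bounded queue while the device is offline. `upload` is a placeholder, not a real telemetry API.

```python
from collections import deque

SAFE_DEFAULT = "needs_human_review"  # hypothetical safe fallback label


class EdgeRuntime:
    def __init__(self, max_buffer: int = 1000) -> None:
        self.versions = []                      # (version, predict_fn), newest last
        self.buffer = deque(maxlen=max_buffer)  # offline queue; oldest dropped when full
        self.online = True

    def deploy(self, version, predict_fn) -> None:
        self.versions.append((version, predict_fn))

    def rollback(self) -> None:
        # Keep at least one version so the device never runs without a model.
        if len(self.versions) > 1:
            self.versions.pop()

    def infer(self, x):
        version, predict_fn = self.versions[-1]
        try:
            result = predict_fn(x)
        except Exception:
            result, version = SAFE_DEFAULT, "fallback"
        record = {"input": x, "result": result, "version": version}
        if self.online:
            self.upload([record])
        else:
            self.buffer.append(record)  # sync later
        return result

    def upload(self, records) -> None:
        pass  # placeholder for a real telemetry call (hypothetical)

    def reconnect(self) -> None:
        self.online = True
        self.upload(list(self.buffer))
        self.buffer.clear()
```

The bounded `deque` is the written-down answer to “what happens offline?”: inference keeps working, and the log buffer can’t exhaust device memory, at the cost of dropping the oldest records.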

On the edge, latency isn’t just a metric—it becomes part of the product’s personality.

5) AI infrastructure and quantum computing: the boring stuff that decides the bill

In the interviews, leaders kept circling back to the same point: AI infrastructure is strategy, not IT housekeeping. I used to treat compute, storage, and networking like background noise. Now I hear it as a business decision: what we can build, how fast we can ship, and what we can afford to run every day.

What “denser, hybrid systems” means in plain language

When they said “denser, hybrid systems,” they weren’t trying to sound fancy. They meant stacking the right tools together so workloads land on the best hardware at the best time. In practice, that looks like:

- Mixing CPUs, GPUs, and accelerators so training and inference don’t fight for the same resources.

- Smarter scheduling (queueing, autoscaling, priority rules) so urgent jobs don’t wait behind experiments.

- Specialized hardware when it truly lowers cost or latency, not because it’s trendy.
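“Priority rules so urgent jobs don’t wait behind experiments” can be sketched with Python’s `heapq`. The three job classes are hypothetical; a real cluster scheduler would also handle resources, preemption, and quotas.

```python
import heapq
import itertools
from typing import Optional

# Hypothetical priority classes: lower number runs first.
PRIORITY = {"prod_inference": 0, "retraining": 1, "experiment": 2}


class Scheduler:
    def __init__(self) -> None:
        self._heap = []
        self._seq = itertools.count()  # tie-breaker: FIFO within the same class

    def submit(self, job_name: str, job_class: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[job_class], next(self._seq), job_name))

    def next_job(self) -> Optional[str]:
        return heapq.heappop(self._heap)[2] if self._heap else None
```

Strict priority like this can starve low-priority work if urgent jobs never stop arriving; aging priorities or reserving some capacity for experiments are common refinements.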

For “AI Trends 2026,” this is the quiet shift: infrastructure choices are becoming product choices.

Where quantum computing fits (for now)

Quantum came up, but not as a magic replacement for GPUs. The tone was cautious. Most leaders described it as experiments aimed at efficiency or accuracy—more lab bench than production line. I heard use cases like optimization and sampling, but always with a big asterisk: integration, tooling, and reliability still limit real-world deployment.

“It’s promising, but it’s not where we run our core models today.”

Real-time analytics is the pressure test

Real-time analytics kept showing up as the stress case. If your data pipelines can’t ingest, clean, and serve features fast, then no agentic AI can “think” quickly enough to help. Latency isn’t just a metric; it’s a ceiling on automation.
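One practical way to treat latency as a ceiling on automation is a freshness gate: only act automatically when every input is within its freshness budget. A minimal sketch, assuming hypothetical per-feature SLAs; features without an SLA are treated as stale on purpose.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Hypothetical freshness SLAs per feature.
FRESHNESS_SLA = {
    "inventory_level": timedelta(minutes=5),
    "customer_segment": timedelta(days=1),
}


def stale_features(updated_at: Dict[str, datetime], now: Optional[datetime] = None) -> List[str]:
    """Features older than their SLA; features with no SLA count as stale."""
    if now is None:
        now = datetime.now(timezone.utc)
    stale = []
    for name, ts in updated_at.items():
        sla = FRESHNESS_SLA.get(name)
        if sla is None or now - ts > sla:
            stale.append(name)
    return stale


def route(updated_at: Dict[str, datetime], now: Optional[datetime] = None) -> str:
    # Automate only when every input is fresh; otherwise defer to a human.
    return "defer_to_human" if stale_features(updated_at, now) else "automate"
```

With a gate like this, a feed that arrives six hours late degrades into a human decision instead of an automated answer built on yesterday’s truth.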

My budgeting confession: I now track cost per decision

I used to undercount inference costs. Training was the headline, but inference was the monthly bill. Now I track cost per decision like it’s rent:

- tokens or calls per outcome

- latency targets vs. hardware spend

- cache hit rate and reuse
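Cost per decision is simple arithmetic once you write it down. A minimal sketch: every number below is made up, cached answers are assumed to cost nothing, and fixed infrastructure spend is spread evenly across decisions.

```python
def cost_per_decision(calls_per_decision: float, tokens_per_call: float,
                      price_per_1k_tokens: float, cache_hit_rate: float,
                      infra_cost_per_month: float, decisions_per_month: float) -> float:
    """Blended cost of one business decision; every input here is an assumption."""
    # Cached answers are treated as free; only cache misses are billed.
    billable_calls = calls_per_decision * (1 - cache_hit_rate)
    token_cost = billable_calls * tokens_per_call / 1000 * price_per_1k_tokens
    # Fixed spend (GPUs, storage, on-call time) spread across all decisions.
    infra_share = infra_cost_per_month / decisions_per_month
    return token_cost + infra_share


# Made-up numbers: 4 calls of 1,500 tokens, $0.002 per 1K tokens, 25% cache hits,
# $3,000/month fixed spend, 200,000 decisions/month.
print(round(cost_per_decision(4, 1500, 0.002, 0.25, 3000, 200_000), 4))  # 0.024
```

In this made-up example the fixed share ($0.015) outweighs the token cost ($0.009), which is exactly the kind of surprise that undercounting inference hides.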

6) Where it gets human: AI healthcare + AI research (and my cautious optimism)

From the interviews, AI in healthcare is the first place I see “AI as partner” feel real, not theoretical. It shows up in practical workflows: symptom triage that helps route patients faster, treatment planning that surfaces options clinicians may want to review, and tools that help close care gaps by spotting missed follow-ups or overdue screenings. This is not magic. It’s pattern recognition plus better coordination—two things healthcare badly needs.

At the same time, AI research is getting quietly supercharged. Leaders described systems that can help generate hypotheses, suggest next experiments, and even support “lab-assistant” behaviors. I’m watching three areas closely:

- Hypothesis generation: turning messy literature into testable ideas

- Experiment control: helping tune protocols and track variables

- Lab-assistant behaviors: documenting steps, checking constraints, reducing human slip-ups

My ethical speed bump is simple: the faster systems move, the more we need explicit guardrails. Yes, AI ethics still matters even when nobody wants another policy doc. In healthcare and research, speed can amplify harm: biased triage, overconfident recommendations, or automated actions that no one can fully explain. Guardrails should be boring and specific—clear approval steps, audit logs, and “stop” rules when data quality drops.
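“Boring and specific” guardrails can be a single gate function. A minimal sketch, assuming a hypothetical completeness threshold as the stop rule, a named human approver, and an append-only list standing in for a durable audit log; field names and the action label are illustrative only.

```python
from datetime import datetime, timezone
from typing import List, Optional

MIN_COMPLETENESS = 0.95  # hypothetical stop-rule threshold


def completeness(record: dict, required_fields: List[str]) -> float:
    present = sum(1 for f in required_fields if record.get(f) is not None)
    return present / len(required_fields)


def gate_action(action: str, record: dict, required_fields: List[str],
                audit_log: List[dict], approved_by: Optional[str] = None) -> str:
    """Stop on poor data, require a named approver, and log every outcome."""
    score = completeness(record, required_fields)
    if score < MIN_COMPLETENESS:
        status = "stopped: data quality below threshold"
    elif approved_by is None:
        status = "pending: approval required"
    else:
        status = "executed"
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "completeness": round(score, 3),
        "approved_by": approved_by,
        "status": status,
    })
    return status
```

The stop rule is checked before approval on purpose: a human signature should not be able to push an action through on data the system already knows is incomplete.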

A “what if” scenario I can’t stop thinking about

Imagine a multi-agent AI lab assistant that proposes an experiment, orders reagents, runs checks, then flags anomalies in real time. One agent reads papers, another drafts the protocol, another monitors instruments, and another watches for safety and compliance. The lab moves faster, but only if humans stay in the loop with clear authority.

How I explain this to a non-technical friend

It’s not replacing experts; it’s reducing the blank-page moments and the clerical grind.

That’s my cautious optimism: AI trends in 2026 feel most valuable when they support people doing high-stakes work, not when they try to remove them.

Conclusion: My 2026 ‘boring-first’ AI playbook

On that delayed train, the lesson was simple: systems win, not slogans. Every leader I spoke with had a different stack and a different budget, but the same pattern showed up again and again. The teams that shipped value did not chase the newest demo. They built a steady path from messy reality to reliable outcomes.

The through-line from these interviews is clear. It starts with data quality, because even the best model cannot fix missing fields, unclear labels, or broken handoffs. From there, the conversation moves to agentic AI coordination: not “agents everywhere,” but careful choices about when an agent should plan, call tools, and hand work back to a human. Then comes the edge AI reality, where latency, privacy, and device limits force trade-offs that cloud-only thinking ignores. None of this works without AI infrastructure—monitoring, evaluation, versioning, and cost controls—so the system stays stable after launch. And finally, it lands in real domains like AI healthcare and AI research, where safety, audit trails, and clear responsibility matter more than clever prompts.

My practical next step for 2026 is boring on purpose. I’m going to pick one workflow, map the data quality failures end to end, and write down where time is lost or decisions go wrong. Only then will I decide if AI agents help—or if traditional AI is enough. If a simple classifier, rules, or a dashboard solves it, I’ll take the win and move on.

One wild card I’m keeping: try explaining your AI stack to your future self in three sentences. If you can’t, it’s too complicated.

To keep myself honest, I’m building a personal “Monday test”: what breaks first when the week starts, who notices, and how fast we can recover. I’d love for you to build your own Monday test and compare notes—because in 2026, the boring-first teams will be the ones still standing.

TL;DR: AI trends 2026 feel less like “bigger models everywhere” and more like “smarter systems with better inputs.” Expect generative AI to focus on data quality, agentic AI and multi-agent AI to handle workflows, edge AI to mature via smaller models, and AI infrastructure (even quantum computing experiments) to become a competitive lever—especially in AI healthcare and AI research.
