Enterprise AI in 2025: What I’m Seeing Now

I didn’t realize Enterprise AI had “grown up” until a finance lead stopped asking me what an LLM is and started asking which cost center should own it. That’s the vibe in 2025: fewer demos, more invoices; fewer moonshots, more Production Models. In this post I’m walking through the State of AI as I’m experiencing it—where Generative AI spending is piling up, what’s changing in AI Infrastructure (yes, Vector Databases are still everywhere), and which teams are quietly winning with Departmental AI. I’ll share the stats that stuck with me, a couple of candid hallway conversations, and one hypothetical scenario I keep using to sanity-check AI ROI before anyone signs another annual commitment.

1) My quick “State of AI” gut-check (and why it matters)

The moment my calendar flipped from “AI brainstorm” to “AI cost review” meetings, I knew the State of AI had changed. Not because people stopped caring—because Enterprise AI stopped being a novelty. In 2025, AI has moved from innovation theater to operational plumbing.

When I say the State of AI in 2025, I mean this: less hype, more operational muscle. The questions I hear now are simple and sharp: What are we paying for? What’s in production? What’s the AI ROI?

Satya Nadella: “AI is the defining technology of our times.”

I’m grounding this gut-check in reported 2024–2025 stats and projections to 2030 from sources such as Menlo Ventures, Stanford AI Index, Databricks, McKinsey, OpenAI, Deloitte, Glean, and Unframe.

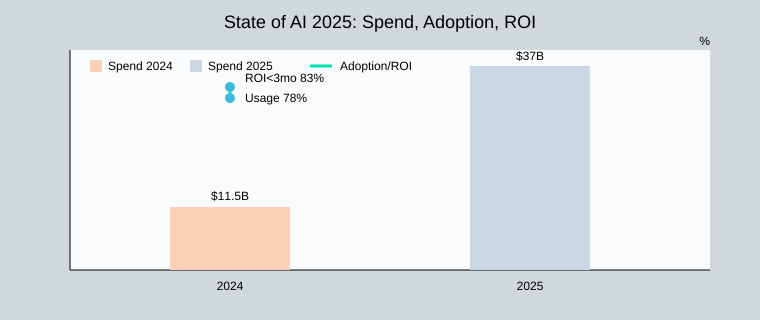

Three signals I watch across AI Trends

- AI Spending: Generative AI budgets are no longer “experiments.” Companies spent $37B on Generative AI in 2025, up 3.2x from $11.5B in 2024. That kind of jump forces governance, vendor scrutiny, and cost controls.

- Production models in real workflows: I look for models tied to tickets, claims, calls, code reviews, and finance close—not demos. “Works in a notebook” doesn’t count.

- AI Adoption beyond one champion: In 2024, 78% of organizations used AI. The real test is whether usage spreads past one team into shared platforms, shared prompts, and shared metrics.
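To sanity-check the 3.2x figure, here's the arithmetic in a few lines of Python. It uses only the two spending numbers quoted above, in billions of USD:

```python
# 2024 and 2025 Generative AI spending quoted above, in billions of USD.
GENAI_SPEND_2024 = 11.5
GENAI_SPEND_2025 = 37.0


def growth_multiple(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


multiple = growth_multiple(GENAI_SPEND_2024, GENAI_SPEND_2025)
print(f"{multiple:.1f}x")  # prints "3.2x"
```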

A mild tangent: “pilot” is now a smell test

When someone says “pilot,” I ask for three things: a named owner, a production path, and a measurable payback window. If they can’t answer, it’s not a pilot—it’s a stall. The graceful way to retire “pilot” is to rename it: time-boxed production trial, with a cost cap and a go/no-go date. That matches what the data suggests anyway: in 2024, 83% of AI-implementing organizations saw positive ROI within 3 months.
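Here's a minimal sketch of how I'd encode that smell test. The `Trial` record, its field names, and the decision strings are my own hypothetical choices, not a standard. The point is that three answers, plus a cost cap and a go/no-go date, are enough to make the call mechanically:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Trial:
    """Hypothetical record for a time-boxed production trial."""
    name: str
    owner: Optional[str]             # named owner
    production_path: Optional[str]   # how it reaches production
    payback_months: Optional[float]  # measurable payback window
    cost_cap_usd: float
    go_no_go: date


def decision(trial: Trial, spent_usd: float, today: date) -> str:
    # Missing any of the three answers means it's a stall, not a pilot.
    if not trial.owner or not trial.production_path or trial.payback_months is None:
        return "stall"
    if spent_usd > trial.cost_cap_usd:
        return "stop: cost cap exceeded"
    if today >= trial.go_no_go:
        return "decide: go/no-go date reached"
    return "continue"


trial = Trial("claims-assistant", "claims-ops-lead", "claims queue v2",
              payback_months=3, cost_cap_usd=20_000, go_no_go=date(2025, 9, 30))
print(decision(trial, spent_usd=12_000, today=date(2025, 8, 1)))  # prints "continue"
```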

| Metric | Value |
|---|---|
| Generative AI spending (2024) | $11.5B |
| Generative AI spending (2025) | $37B |
| Organizations using AI (2024) | 78% |
| Positive AI ROI within 3 months (2024) | 83% |

2) Follow the money: AI Spending shifts from infra to apps

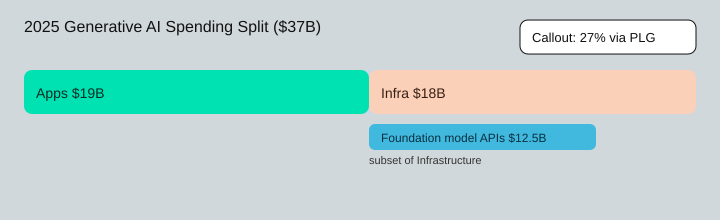

In 2025, what surprised me about AI Spending wasn’t the total—it was the split. The 2025 Generative AI total hit $37B, but the application layer captured $19B, edging out AI Infrastructure at $18B. That tells me the AI Market is moving from “build the engine” to “ship the product.”
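The split is easy to turn into shares. Here's a quick sketch using the 2025 figures above, in billions of USD. It shows that "edging out" means apps hold just over half of the total:

```python
# 2025 Generative AI spend by layer, as quoted above (billions of USD).
spend = {"applications": 19.0, "infrastructure": 18.0}
total = sum(spend.values())  # 37.0, matching the reported total

for layer, amount in spend.items():
    print(f"{layer}: {amount / total:.1%}")
# applications: 51.4%
# infrastructure: 48.6%
```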

Apps took the lead, and buying behavior changed

When apps take over half of spend, budgets show up in more places: IT still pays for platforms, but product teams fund copilots, and departments (sales, support, finance) buy tools that map to their KPIs. I’ve felt this shift in negotiations: one week I’m debating seat-based pricing for copilots (“How many users?”), and the next I’m staring at a usage dashboard asking, “Why did LLM usage spike on Tuesday?”

Infrastructure is still huge: Foundation model APIs are the new utility bill

Even with apps leading, infra remains a major cost center because foundation model APIs are now a recurring line item. Of the $18B infra spend, $12.5B is specifically on Foundation Models via APIs. Andrew Ng’s line keeps coming to mind:

“AI is the new electricity.”

In practice, that means I treat API spend like power: measurable, variable, and worth optimizing.
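If API spend is a utility bill, it deserves a meter. Below is a minimal sketch that assumes per-token pricing. The prices in `PRICE_PER_1K` are placeholders, not any provider's actual rates. The "spike" rule, a day above twice the mean daily spend, is an arbitrary threshold I picked to answer the "why Tuesday?" question:

```python
from collections import defaultdict

# Placeholder prices in USD per 1,000 tokens; substitute your provider's rate card.
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}

daily_cost = defaultdict(float)  # day -> USD spent on API calls


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of one API call under the placeholder per-token prices."""
    return (input_tokens * PRICE_PER_1K["input"]
            + output_tokens * PRICE_PER_1K["output"]) / 1000


def record_call(day: str, input_tokens: int, output_tokens: int) -> None:
    daily_cost[day] += call_cost(input_tokens, output_tokens)


def spike_days(threshold_ratio: float = 2.0) -> list[str]:
    """Days whose spend exceeds `threshold_ratio` times the mean daily spend."""
    if not daily_cost:
        return []
    mean = sum(daily_cost.values()) / len(daily_cost)
    return [day for day, cost in daily_cost.items() if cost > threshold_ratio * mean]


# Hypothetical week: same call shape, very different volumes.
for day, n_calls in [("Mon", 100), ("Tue", 900), ("Wed", 120)]:
    for _ in range(n_calls):
        record_call(day, input_tokens=1_000, output_tokens=500)

print(spike_days())  # prints "['Tue']"
```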

PLG is disproportionately important in AI purchasing

Another signal: 27% of AI spend flows through product-led growth (PLG), about 4x traditional software. Teams try tools fast, prove value, then procurement catches up.

My practical takeaway: map spend to value moments

- Value moment: ticket deflection → track cost per resolved case (not spend per model type).

- Value moment: faster drafting → compare seat costs vs saved hours.

- Value moment: better search → monitor API calls per successful answer.
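Each value moment reduces to a unit metric you can compute from numbers finance already has. Here's a hedged sketch. The function names and the example monthly figures are hypothetical:

```python
def _ratio(numerator: float, denominator: float) -> float:
    if denominator == 0:
        raise ValueError("denominator must be non-zero")
    return numerator / denominator


def cost_per_resolved_case(ai_cost: float, resolved_cases: int) -> float:
    """Ticket deflection: total AI cost divided by cases it resolved."""
    return _ratio(ai_cost, resolved_cases)


def drafting_net_value(seats: int, seat_price: float, hours_saved: float, hourly_rate: float) -> float:
    """Faster drafting: value of saved hours minus seat cost (positive = pays off)."""
    return hours_saved * hourly_rate - seats * seat_price


def calls_per_successful_answer(api_calls: int, successful_answers: int) -> float:
    """Better search: API calls spent per answer users accepted."""
    return _ratio(api_calls, successful_answers)


# Hypothetical month of numbers.
print(cost_per_resolved_case(4_000, 2_000))        # prints 2.0
print(drafting_net_value(50, 30.0, 200, 60.0))     # prints 10500.0
print(calls_per_successful_answer(12_000, 3_000))  # prints 4.0
```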

| Metric (2025) | Value |
|---|---|
| Generative AI total spend | $37B |
| Application layer spend | $19B |
| Infrastructure spend | $18B |
| Foundation model API spend (subset of infra) | $12.5B |
| AI spend via PLG | 27% (~4x traditional software) |

3) Shipping reality: Production Models, RAG, and the Vector Database boom

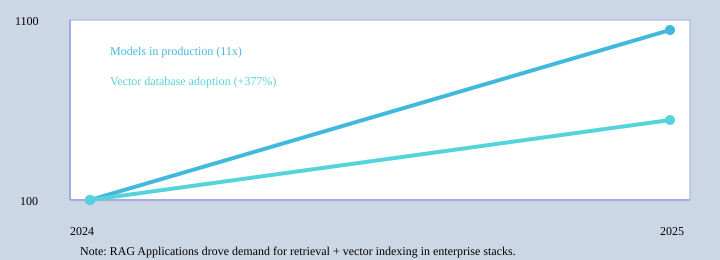

The quiet headline I keep seeing in 2025 is this: organizations are putting 11x more AI models into production than they did a year ago. That shift matters because Production Models are not demos. A prototype answers “can this work?” Production answers “can this run every day, with real users, real data, and real risk?” This is where AI Infrastructure stops being a slide and becomes an on-call job.

Jensen Huang: “AI is the new industrial revolution.”

Why RAG Applications became the default (and when I avoid it)

RAG Applications won because they let teams ship useful features without retraining: connect a model to trusted documents, retrieve the right context, then generate. It’s fast, auditable, and usually cheaper than fine-tuning. I avoid RAG when the task needs strict determinism (like pricing rules), when the knowledge is tiny and stable (a simple lookup wins), or when Data Quality is messy enough that retrieval just amplifies confusion.
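To make the "retrieve, then generate" loop concrete, here's a minimal sketch of the retrieval half. The three-dimensional vectors are toy stand-ins for real embeddings, and the policy snippets are invented. The model call that would consume `build_prompt`'s output is left out. A production system would use an embedding model and a vector database instead of a dict and a linear scan:

```python
import math

# Toy corpus: (embedding, text). Real embeddings come from an embedding model.
DOCS = {
    "refund-policy": ([0.9, 0.1, 0.0], "Refunds are issued within 14 days of approval."),
    "travel-policy": ([0.1, 0.8, 0.2], "Book economy for flights under 6 hours."),
    "security-policy": ([0.0, 0.2, 0.9], "Laptops must use full-disk encryption."),
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], k: int = 1) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query vector."""
    ranked = sorted(DOCS.items(), key=lambda item: cosine(query_vec, item[1][0]), reverse=True)
    return [(doc_id, text) for doc_id, (_, text) in ranked[:k]]


def build_prompt(question: str, query_vec: list[float], k: int = 1) -> str:
    """Ground the model: retrieved sources go in the prompt with IDs to cite."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query_vec, k))
    return f"Answer using only these sources and cite them.\n{sources}\n\nQuestion: {question}"


# Pretend this vector is the embedding of the question below.
print(build_prompt("How fast are refunds paid?", [0.85, 0.15, 0.05]))
```

Keeping source IDs in the prompt is what makes the answer auditable: the model is asked to cite them, and a reviewer can check each citation against the retrieved text.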

Vector Databases grew 377%—and you can see it in diagrams and budgets

That 377% growth shows up as a new “vector layer” in architecture diagrams: ingestion pipelines, embedding jobs, index rebuilds, and access controls. The first time one of our vector indexes got corrupted, the team treated it like a database incident, because it is one. Backups, monitoring, and recovery plans suddenly became non-negotiable.
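Treating the index like a database starts with backups you can verify. Here's a minimal sketch that assumes the index can be serialized to JSON. Real vector databases ship their own snapshot tooling, and you should prefer it. The SHA-256 sidecar file is my own hypothetical convention for catching a corrupted snapshot before it gets restored:

```python
import hashlib
import json
import tempfile
from pathlib import Path


def snapshot(index: dict, path: Path) -> str:
    """Write the index as JSON plus a SHA-256 sidecar file; return the digest."""
    payload = json.dumps(index, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(payload).hexdigest()
    path.write_bytes(payload)
    path.with_suffix(".sha256").write_text(digest)
    return digest


def restore(path: Path) -> dict:
    """Load a snapshot, refusing it if the bytes no longer match the recorded digest."""
    payload = path.read_bytes()
    expected = path.with_suffix(".sha256").read_text().strip()
    if hashlib.sha256(payload).hexdigest() != expected:
        raise ValueError(f"{path} failed its checksum; handle it as a corruption incident")
    return json.loads(payload)


with tempfile.TemporaryDirectory() as tmp:
    snap = Path(tmp) / "index.json"
    snapshot({"doc-1": [0.1, 0.2], "doc-2": [0.3, 0.4]}, snap)
    print(restore(snap) == {"doc-1": [0.1, 0.2], "doc-2": [0.3, 0.4]})  # prints True
```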

My checklist for Production Models

- Latency: p95 and p99, not just averages

- Evals: automated tests for quality, safety, and regressions

- Data Quality: freshness, duplicates, permissions, and drift

- 2 a.m. plan: alerts, rollback, rate limits, and human escalation
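On the first checklist item, averages hide tail latency. Here's a small sketch that uses the nearest-rank percentile method on hypothetical latencies: 98 fast requests and 2 slow ones.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical: 98 fast requests and 2 slow ones, in milliseconds.
latencies_ms = [200.0] * 98 + [3000.0] * 2
mean = sum(latencies_ms) / len(latencies_ms)
print(mean, percentile(latencies_ms, 95), percentile(latencies_ms, 99))
# prints 256.0 200.0 3000.0
```

The mean of 256 ms looks healthy. The p99 of 3,000 ms is the number your unhappiest users actually feel.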

When teams productionize, AI productivity improves: less rework, more consistent outputs, and fewer “it worked in the notebook” surprises.

| Metric (2024–2025) | Reported change |
|---|---|
| Models moved into production | 11x increase |
| Vector database adoption | 377% growth |

4) Departmental AI vs Vertical AI: where adoption feels most “real”

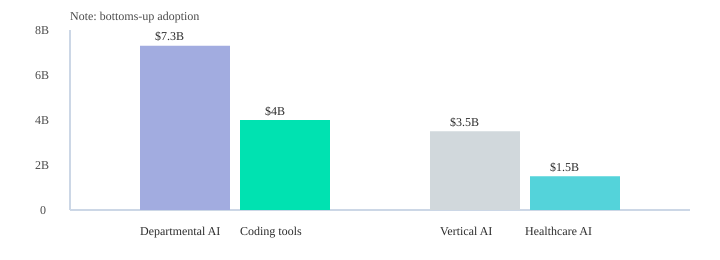

In 2025, the most “real” AI Adoption I see is not the big, top-down program. It’s Departmental AI—now about $7.3B in spend—because productivity feels personal. A manager can buy a tool, prove ROI in weeks, and expand without waiting for a committee.

Departmental AI ($7.3B): small teams move faster than big programs

Department-led purchases dominate several high-ROI use cases, especially coding. Coding tools alone are about $4B, and I keep seeing dev teams adopt them quietly, then “officially” later. I once watched an awkward code review where the AI wrote a clean function, but the developer couldn’t explain it. The lesson wasn’t “ban the tool.” It was: require comments, tests, and ownership.

Fei-Fei Li: “There’s nothing artificial about AI.”

Vertical AI ($3.5B): Healthcare AI ($1.5B) proves regulated can still move

Vertical AI hit $3.5B, and Healthcare AI leads at $1.5B. Regulated industries moved anyway because the workflow is already structured: intake, coding, prior auth, clinical notes, audit trails. Procurement looks different too—more security reviews, more vendor due diligence—but the buying decision is still tied to a specific workflow owner.

A quick heuristic I use: “Who owns the workflow?” beats “Who owns the model?”

Here’s my simple test. If a team owns the workflow end-to-end, they can adopt fast. If no one owns it, you get a “platform” that nobody uses.

- Hypothetical win: a claims analyst uses a RAG assistant to cite policy text and draft decisions.

- Hypothetical miss: a massive enterprise chatbot launches, but no department updates content or measures outcomes.

| Category | 2025 Spend |
|---|---|
| Departmental AI | $7.3B |
| Coding tools (subset) | $4B |
| Vertical AI | $3.5B |
| Healthcare AI (subset) | $1.5B |

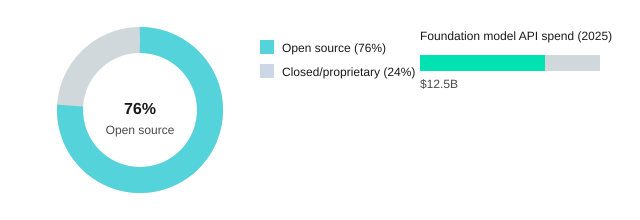

5) Open source, Foundation Models, and the “build vs buy” mood swing

In my Enterprise AI work this year, Open Source has become the default for a lot of real LLM usage. The number I keep hearing matches what I’m seeing: 76% of LLM users choose open source models. Inside enterprises, that usually means a small “model platform” team curates a short list (two or three models), sets guardrails, and gives product teams a safe path to ship. The motivations are practical: lower unit costs at scale, more control over customization, and clearer governance when you can inspect and pin versions.
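A "short list" can be as simple as a pinned allowlist that product teams resolve against. In this sketch, the use-case keys, model names, and version tags are all placeholders, not real models:

```python
# Hypothetical curated short list; use-case keys, model names, and versions are placeholders.
APPROVED_MODELS = {
    "general-chat": ("open-model-a", "2025-03-01"),
    "code-assist": ("open-model-b", "1.4.2"),
}


def resolve_model(use_case: str) -> tuple[str, str]:
    """Return the pinned (model, version) for a use case, or refuse unapproved ones."""
    try:
        return APPROVED_MODELS[use_case]
    except KeyError:
        raise LookupError(f"no approved model for '{use_case}'; ask the model platform team") from None


print(resolve_model("code-assist"))  # prints ('open-model-b', '1.4.2')
```

Pinning exact versions means an upgrade becomes a reviewed change to the list instead of silent drift. That is most of the governance benefit described above.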

Why “buy” still wins a big chunk of AI Infrastructure spend

Even with open source momentum, Foundation Models delivered via API still dominate budgets. In 2025, infrastructure spending was about $18B, including $12.5B on foundation model APIs. That’s why hybrid stacks are common: teams prototype with an API model, then move steady workloads to private deployments when the math stops working. I watched one team do exactly this—after a successful pilot, their per-call costs climbed fast with daily usage. They kept the API for edge cases (rare languages, peak load), but shifted the core workflow to an open model hosted internally for predictable spend and tighter data handling.
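"When the math stops working" is a break-even calculation. Here's a sketch built on a simple cost model: the API charges per call, while internal hosting has a fixed monthly cost plus a small marginal cost per call. All prices are hypothetical. The routing rule mirrors that team's split, with the API handling rare languages and peak load and the internal model handling the core workflow:

```python
import math


def break_even_calls(api_cost_per_call: float, fixed_monthly: float,
                     marginal_per_call: float) -> float:
    """Monthly call volume above which internal hosting beats the API on cost.

    Assumes API cost = calls * api_cost_per_call and
    hosted cost = fixed_monthly + calls * marginal_per_call.
    """
    if api_cost_per_call <= marginal_per_call:
        return math.inf  # hosting never catches up
    return fixed_monthly / (api_cost_per_call - marginal_per_call)


def route(language: str, at_peak: bool, core_languages: set[str]) -> str:
    """Core workflow runs internally; rare languages and peak overflow go to the API."""
    if at_peak or language not in core_languages:
        return "api"
    return "internal"


# Hypothetical prices: $0.02 per API call vs $8,000/month fixed + $0.004 per hosted call.
print(f"{break_even_calls(0.02, 8_000, 0.004):,.0f} calls/month")  # prints "500,000 calls/month"
print(route("en", at_peak=False, core_languages={"en", "es"}))    # prints "internal"
print(route("is", at_peak=False, core_languages={"en", "es"}))    # prints "api"
```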

My unpopular opinion: model choice matters less than discipline

I care less about which model “wins” and more about evaluation discipline and data contracts: what data can be used, where it can go, how long it’s stored, and how outputs are tested. That’s the difference between demos and durable Enterprise AI.
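A data contract can be small enough to check in code. This sketch turns the four questions above into fields. The field names, data classes, and eval names are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract covering the four questions in the text."""
    allowed_data: frozenset[str]          # what data can be used
    allowed_destinations: frozenset[str]  # where it can go
    max_retention_days: int               # how long it's stored
    required_evals: tuple[str, ...]       # how outputs are tested


def violations(c: DataContract, data_classes: set[str], destination: str,
               retention_days: int, evals_passed: set[str]) -> list[str]:
    """List every way a proposed use breaks the contract (empty list = compliant)."""
    problems = [f"data not allowed: {d}" for d in sorted(data_classes - c.allowed_data)]
    if destination not in c.allowed_destinations:
        problems.append(f"destination not allowed: {destination}")
    if retention_days > c.max_retention_days:
        problems.append(f"retention {retention_days}d exceeds {c.max_retention_days}d")
    problems += [f"missing eval: {e}" for e in c.required_evals if e not in evals_passed]
    return problems


contract = DataContract(frozenset({"public", "internal"}), frozenset({"internal-host"}),
                        30, ("quality", "safety"))
print(violations(contract, {"internal", "customer-pii"}, "internal-host", 90, {"quality"}))
# prints ['data not allowed: customer-pii', 'retention 90d exceeds 30d', 'missing eval: safety']
```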

Demis Hassabis: “We’re building AI systems that can help us solve some of the biggest challenges.”

Ingredients vs appliances

- Ingredients: open models you can tune, host, and combine—more work, more control.

- Appliances: API Foundation Models—fast to use, but you pay for convenience.

| Metric | Value |
|---|---|
| LLM users choosing open source models | 76% |
| 2025 foundation model API spending | $12.5B |

6) Outcomes and the messy human layer: AI Productivity, AI ROI, and jobs

AI Productivity: what employees report (and why I believe them, mostly)

In my conversations, AI Productivity is real at the individual level. The data matches what I hear: 75% of workers say AI improves work speed or quality. I believe it—mostly—because the gains show up in small, repeatable moments: faster first drafts, cleaner summaries, better search, fewer “blank page” starts. But I also saw the messy part early on: the first time a teammate admitted they were embarrassed to use a copilot tool, worried it would look like cheating. That’s why AI Adoption is not just tools; it’s trust.

AI ROI and Business efficiency: what companies report (and where it plateaus)

On the business side, 64% of businesses report productivity gains. I’ve seen Business efficiency improve fastest in support, sales ops, and internal knowledge work. The plateau usually hits when teams can’t measure outcomes, or when governance is unclear: people either over-use AI (risk) or under-use it (fear). AI ROI becomes visible when leaders tie use cases to cycle time, quality checks, and cost-to-serve—not just “hours saved.”

Workforce Impact: how I talk about jobs without panic

The near-term Workforce Impact is uncomfortable: by 2025, AI is expected to replace 16% of jobs and create 9% new roles (net -7%). I don’t frame this as doom. I frame it as a planning problem: reskilling, role redesign, and clear pathways into new work (AI ops, model risk, prompt QA, domain trainers).

Reid Hoffman: “AI will amplify human capability, not replace it.”

My closing practice: an “AI user manual” for teams

- Permissions: what data is allowed, what is not

- Expectations: where AI is encouraged vs. optional

- Escalation paths: how to report errors, bias, or leakage

| Metric | Value |
|---|---|
| Workers reporting improved speed/quality | 75% |
| Businesses reporting productivity gains | 64% |
| Net job change from AI by 2025 (16% replaced, 9% created) | -7% |

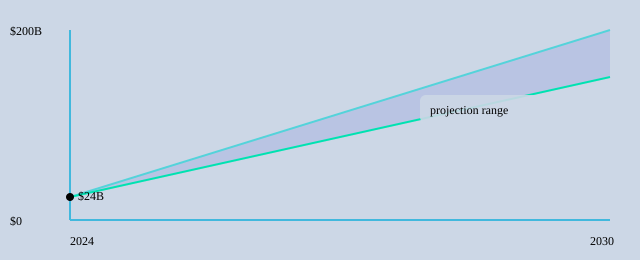

7) The AI Market runway: from 2024 reality to 2030 projections (and my bet)

AI Market growth: what must be true

When I look at AI Market growth, I start with the hard numbers: the Enterprise AI market was about $24B in 2024, and it’s projected to reach $150B–$200B by 2030. That kind of AI Growth only happens if a few things become normal inside big companies: AI moves from pilots to standard tools, security and governance get boring (in a good way), and teams can ship changes weekly—not yearly. It also means procurement gets simpler, and leaders fund AI like they fund cloud: as a core operating line, not a special project.
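It helps to translate that range into an annual growth rate. This assumes smooth compounding over the six years from 2024 to 2030:

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


years = 2030 - 2024  # six years of compounding
low = implied_cagr(24, 150, years)
high = implied_cagr(24, 200, years)
print(f"{low:.0%} to {high:.0%} per year")  # prints "36% to 42% per year"
```

Sustaining roughly 36% to 42% growth every year for six years is the bar those projections set. That is why the "what must be true" list matters.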

Sundar Pichai: “AI will be more profound than electricity or fire.”

AI Trends: “agentic” features are already here

One of the clearest AI Trends I’m seeing in Enterprise AI in 2025 is that Agentic AI is creeping in even when nobody uses that label. It shows up as tools that can take the next step: draft the email, open the ticket, update the CRM field, route the approval, and follow up. The “agent” isn’t a robot coworker; it’s a chain of small actions that saves minutes hundreds of times a day.
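Here's a minimal sketch of that "chain of small actions" idea. The step functions are hypothetical stand-ins for real integrations like email, ticketing, and CRM. The design choice worth copying is that the chain stops at the first failure and records where a human needs to take over, instead of pushing on:

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_chain(steps: list[tuple[str, Step]], state: dict) -> dict:
    """Run small actions in order; stop at the first failure and flag it for a human."""
    state = {**state, "completed": []}
    for name, step in steps:
        try:
            state = step(state)
        except Exception as exc:  # any failure ends the chain here
            return {**state, "escalated_at": name, "error": repr(exc)}
        state["completed"].append(name)
    return state


# Hypothetical stand-ins for real integrations.
def draft_email(s: dict) -> dict:
    return {**s, "email_draft": f"Re: {s['subject']}"}


def open_ticket(s: dict) -> dict:
    return {**s, "ticket_id": "T-1001"}


def update_crm(s: dict) -> dict:
    if "account_id" not in s:
        raise KeyError("account_id")
    return {**s, "crm_updated": True}


steps = [("draft email", draft_email), ("open ticket", open_ticket), ("update CRM", update_crm)]
result = run_chain(steps, {"subject": "Invoice question"})
print(result["completed"], result["escalated_at"])  # prints ['draft email', 'open ticket'] update CRM
```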

My bet: 1,000 micro-automations beat 1 giant assistant

The scenario I use is a 2025 enterprise that runs 1,000 micro-automations across finance, support, sales ops, and HR instead of betting everything on one giant assistant. Each automation is narrow, measured, and owned by a team. Together, they create compounding time savings and cleaner data, which then makes the next automation easier to ship.
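The portfolio view is mostly bookkeeping: every automation has an owner and a measured saving, and the totals roll up. Here's a sketch with hypothetical automations and numbers. It refuses to register an automation without an owning team:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class MicroAutomation:
    """One narrow automation with an owner and a measured saving (hypothetical fields)."""
    name: str
    owner: str
    minutes_saved_per_run: float
    runs_per_week: int

    def __post_init__(self) -> None:
        if not self.owner:
            raise ValueError(f"{self.name} needs an owning team")

    def hours_saved_per_week(self) -> float:
        return self.minutes_saved_per_run * self.runs_per_week / 60


def hours_by_owner(automations: list[MicroAutomation]) -> dict[str, float]:
    totals: dict[str, float] = defaultdict(float)
    for a in automations:
        totals[a.owner] += a.hours_saved_per_week()
    return dict(totals)


# Hypothetical portfolio slice.
portfolio = [
    MicroAutomation("invoice matching", "finance", 3, 400),
    MicroAutomation("ticket triage", "support", 1.5, 2000),
    MicroAutomation("CRM field update", "sales ops", 0.5, 1200),
]
by_owner = hours_by_owner(portfolio)
print(by_owner, sum(by_owner.values()))
# prints {'finance': 20.0, 'support': 50.0, 'sales ops': 10.0} 80.0
```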

| Year | Enterprise AI market size |
|---|---|
| 2024 | $24B |
| 2030 (projected) | $150B–$200B |

So my conclusion on the State of Enterprise AI in 2025 is simple: it’s a budgeting story, a shipping story, and a people story—at the same time. If the projections are even close, AI is becoming a second nervous system for the company. This week, audit one workflow, add one small automation, and measure the time saved.

TL;DR: Enterprise AI in 2025 is defined by spending moving to apps, a jump in Production Models, open source dominance for LLM usage, and faster-than-expected ROI—while workforce and governance questions intensify.
