Digital Transformation Trends 2026: Leading with AI

Last year I watched a “simple” chatbot pilot quietly mutate into a cross-functional fire drill: legal wanted audit trails, finance wanted cost predictability, ops wanted latency guarantees, and the frontline team just wanted it to stop hallucinating policy. That week taught me something I didn’t learn from conference keynotes—leading digital transformation in the AI era isn’t about picking a model. It’s about redesigning how work, platforms, and decision rights flow. In this post, I’m stitching together the patterns I keep seeing (and a few I didn’t expect), from multi-agent systems in the enterprise to cloud-edge intelligence and the weirdly practical rise of physical AI.

My messy definition of “AI-era” transformation (and why it’s not just LLMs)

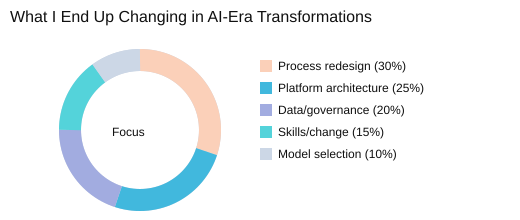

When I track digital transformation trends for 2026, I use a simple rubric: if the workflow changes, the org chart changes too. That’s my shorthand for AI digital transformation—not a tool rollout, but a shift in who decides, who approves, and who monitors as work moves from routine execution to oversight.

Where Large language models fit (and where they distract)

Large language models are great for drafting, search, and “glue work” across systems. But they become expensive noise when teams skip process redesign and try to “chatbot” broken handoffs. I’ve seen pilot scope creep fast: legal, finance, ops, and frontline all show up with valid needs—and suddenly the pilot is a program.

Why Real-time AI reasoning breaks old stacks

In 2026, the hard part of technology innovation is not prompts; it’s architecture. Real-time AI reasoning and multimodal inputs push legacy stacks until they buckle under latency, orchestration, and data-access demands. That’s why AI-native platforms and AI-first architecture are replacing “add-on AI” patterns. The research trend is clear: train in the cloud, run inference at the edge, and keep control hybrid.

A small tangent: one latency spike taught me more than an AI keynote. The model was fine; the pipeline wasn’t. Governance, incentives, and platform choices are the real system.

Satya Nadella: "The real promise of AI is not a smarter tool, but a new way of building systems that adapt while we work."

- Rewrite processes

- Fix data + governance

- Modernize platforms

- Build skills + change habits

| Item | Value |
|---|---|
| Stakeholders who expanded the pilot’s scope | 4 (legal, finance, ops, frontline) |
| Planning horizon for the key shift | 2026 |
| Model workload split | Training in cloud vs inference at edge |

Multi-agent systems in the enterprise: when one model becomes a team

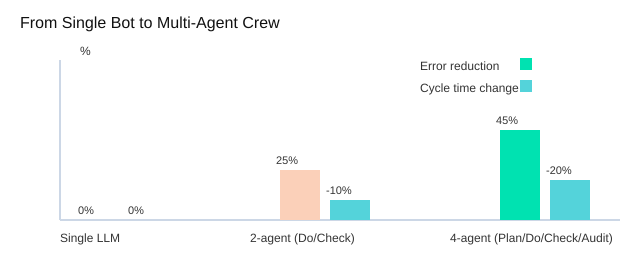

I used to ask for “a chatbot.” Then I watched it over-promise, miss context, and ship errors. That’s when I started asking for a crew: a planner, a doer, and a checker. That crew has since grown into a full enterprise multi-agent pattern.

Why agentic workflows beat simple automation

In multi-agent systems, work is delegated, remembered, and verified. An orchestration layer routes tasks between agents, while vector databases add “memory + retrieval” so agents can cite past decisions and documents. The key shift in agentic workflows is the loop: plan → execute → validate → audit.

- Planner: breaks goals into steps

- Executor: runs actions across tools

- Validator: checks facts, policy, and outputs

- Auditor: logs, samples, and flags drift
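The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production framework. Each “agent” is a plain function standing in for a model call or tool integration, and the names (`planner`, `executor`, `validator`, `auditor`, `AuditLog`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AuditLog:
    """Append-only record of every stage, so the auditor can review it later."""
    entries: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, stage: str, detail: str) -> None:
        self.entries.append((stage, detail))


def planner(goal: str) -> List[str]:
    # Stub: break a goal into ordered steps. A real planner would call a model.
    return [step.strip() for step in goal.split(";") if step.strip()]


def executor(step: str) -> str:
    # Stub: pretend to run the step against tools and return a result.
    return f"done: {step}"


def validator(step: str, result: str) -> bool:
    # Stub check: the result must correspond to the step that was requested.
    return result == f"done: {step}"


def auditor(log: AuditLog) -> List[str]:
    # Flag every failed validation for human review.
    return [detail for stage, detail in log.entries
            if stage == "validate" and detail.startswith("FAILED")]


def run_workflow(goal: str, log: AuditLog) -> List[str]:
    """plan -> execute -> validate, logging each stage for the audit step."""
    results = []
    for step in planner(goal):
        log.record("plan", step)
        result = executor(step)
        log.record("execute", result)
        if not validator(step, result):
            log.record("validate", f"FAILED: {step}")
            break  # stop instead of passing an unverified result downstream
        log.record("validate", f"ok: {step}")
        results.append(result)
    return results


log = AuditLog()
print(run_workflow("read email; check terms; draft reply", log))
# ['done: read email', 'done: check terms', 'done: draft reply']
print(auditor(log))  # []
```

The workflow stops at the first failed validation instead of retrying silently. That is a deliberate choice: it keeps the audit trail honest about where the loop broke.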

What I delegate vs what I keep

I delegate drafting, research, and ticket triage to AI agents. I keep final approvals, pricing, and anything with legal impact. Early on, I over-automated replies and then had to clean up the tone and the wrong commitments they made.
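The split can be written down as an explicit routing rule, so nobody has to remember it. This is a minimal sketch that assumes hypothetical task-type labels. The key design choice is that unknown task types fail closed to a human.

```python
# Hypothetical task-type labels mirroring the delegate/keep split described above.
DELEGATE_TO_AGENT = {"drafting", "research", "ticket_triage"}
KEEP_WITH_HUMAN = {"final_approval", "pricing", "legal"}


def route(task_type: str) -> str:
    """Return 'agent' or 'human' as the owner of a task."""
    if task_type in KEEP_WITH_HUMAN:
        return "human"
    if task_type in DELEGATE_TO_AGENT:
        return "agent"
    # Anything unclassified goes to a human: fail closed, not open.
    return "human"


for task in ["drafting", "pricing", "contract_renewal"]:
    print(task, "->", route(task))
# drafting -> agent
# pricing -> human
# contract_renewal -> human
```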

Risk controls I won’t skip

For agentic AI systems, I require sandboxing, least-privilege permissions, and evaluation gates.

Cadence: weekly regression tests and a monthly red-team review.
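Here is one way an evaluation gate and a least-privilege check might look. It is a sketch under assumptions: the 0.95 pass-rate threshold, the `EvalResult` shape, and the scope strings are hypothetical. A real gate would pull its results from the weekly regression suite.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class EvalResult:
    case_id: str
    passed: bool


def evaluation_gate(baseline: List[EvalResult], candidate: List[EvalResult],
                    min_pass_rate: float = 0.95) -> bool:
    """Allow a release only if the candidate's pass rate meets the threshold
    and no case that passed on the baseline now fails (no regressions)."""
    if not candidate:
        return False  # no evidence, no release
    pass_rate = sum(r.passed for r in candidate) / len(candidate)
    passed_before = {r.case_id for r in baseline if r.passed}
    regressions = [r.case_id for r in candidate
                   if r.case_id in passed_before and not r.passed]
    return pass_rate >= min_pass_rate and not regressions


def least_privilege_ok(requested: Set[str], granted: Set[str]) -> bool:
    # An agent may use only the scopes it was explicitly granted.
    return requested <= granted
```

The gate checks regressions separately from the aggregate pass rate. A new model can raise the average while quietly breaking a case that used to work.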

Andrew Ng: "AI is the new electricity—its biggest impact comes when you redesign the processes around it, not when you bolt it on."

Hypothetical: inbox + browser + ERP in one dashboard

“Super agents” with multi-agent dashboards can read an email, verify terms in the browser, draft a reply, and create an ERP order. The validator then confirms the totals before anything is sent.
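The validator’s last check in that scenario is plain arithmetic, and it is worth doing outside the model. This is a minimal sketch that assumes hypothetical `(quantity, unit_price)` line items. `Decimal` avoids the floating-point rounding that can make money totals disagree by a cent.

```python
from decimal import Decimal
from typing import List, Tuple


def totals_match(line_items: List[Tuple[int, str]], stated_total: str) -> bool:
    """Recompute the order total from (quantity, unit_price) pairs and compare it
    with the total the drafting agent wrote into the reply."""
    computed = sum((qty * Decimal(price) for qty, price in line_items), Decimal("0"))
    return computed == Decimal(stated_total)


items = [(2, "19.99"), (1, "5.00")]
print(totals_match(items, "44.98"))  # True
print(totals_match(items, "45.00"))  # False: block the send and escalate
```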

| Insight | Data |
|---|---|
| Scale outlook | IDC: 45% of organizations orchestrating AI agents at scale by 2030 |
| 4-agent pattern | One each: planner, executor, validator, auditor |
| Ops practice | Weekly regression tests; monthly red-team review |

Vertical AI industries: why the “boring” model wins in 2026

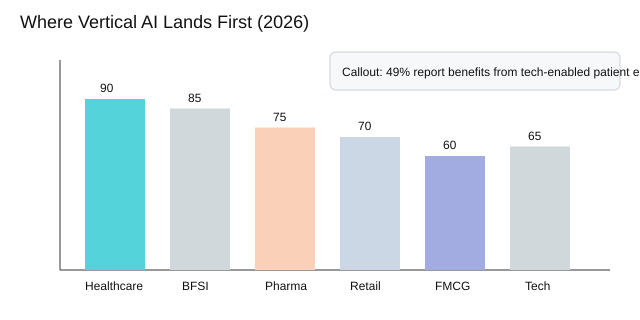

My unpopular opinion: domain-specific AI tuning beats generic cleverness most days. In my enterprise AI adoption work, the wins come from “boring” vertical AI models that know the rules, data, and risks of one field. That’s also where my predictions for 2026 land: vertical AI will outperform general-purpose models in its industries, with healthcare and BFSI leading.

Fei-Fei Li: "The next wave of AI won’t be just smarter—it will be more useful, grounded in the realities of specific domains."

Where vertical AI shows up first (and why)

By 2026, I see adoption intensity ranking like this: Healthcare, BFSI, Pharma, Retail, FMCG, then Tech. These sectors have clear workflows, measurable outcomes, and strict governance needs.

A claims workflow story: accuracy over eloquence

In a claims automation pilot, the model’s writing style did not matter. What mattered was getting codes, policy rules, and exceptions right. A smaller model, fine-tuned on claims notes and reinforced with reviewer feedback, beat a larger generic model on accuracy and auditability.
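A comparison like that comes down to scoring both models against reviewer-labelled claims, field by field. This is a minimal sketch that assumes hypothetical field names (`code`, `exception`) and toy data. It uses exact match because claim codes are categorical, so a near miss is still wrong.

```python
from typing import Dict, List


def field_accuracy(predictions: List[dict], gold: List[dict],
                   fields: List[str]) -> Dict[str, float]:
    """Exact-match accuracy per field against reviewer-labelled claims."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("need one prediction per labelled claim")
    return {
        f: sum(p.get(f) == g.get(f) for p, g in zip(predictions, gold)) / len(gold)
        for f in fields
    }


# Toy data for illustration only.
gold = [{"code": "A1", "exception": False}, {"code": "B2", "exception": True}]
model_a = [{"code": "A1", "exception": False}, {"code": "B2", "exception": True}]
model_b = [{"code": "A1", "exception": False}, {"code": "B3", "exception": True}]
print(field_accuracy(model_a, gold, ["code", "exception"]))  # {'code': 1.0, 'exception': 1.0}
print(field_accuracy(model_b, gold, ["code", "exception"]))  # {'code': 0.5, 'exception': 1.0}
```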

How smaller models punch above their weight

With fine-tuning + reinforcement learning, efficient models can match or exceed giant models in narrow tasks—while being cheaper to run and easier to govern. Open-source AI diversification also helps: shared standards, interoperability, security-audited releases, and transparent data pipelines.

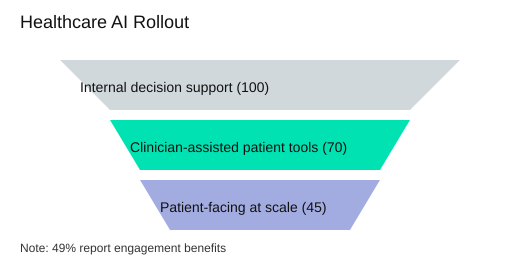

- Healthcare: patient triage + engagement (49% report benefits from tech-enabled patient engagement)

- BFSI: fraud detection + risk modeling

- Retail/FMCG: hyperlocal demand sensing

- Insurance/Pharma: claims automation + regulated documentation

| Item | Data |
|---|---|
| Adoption leaders (2026, in order) | Healthcare, BFSI, Pharma, Retail, FMCG, Tech |
| Use cases mapped | 5 (triage, claims, fraud, risk, hyperlocal demand) |
| Report benefits from tech-enabled patient engagement | 49% |

AI-native platform architecture: rebuilding the plane mid-flight

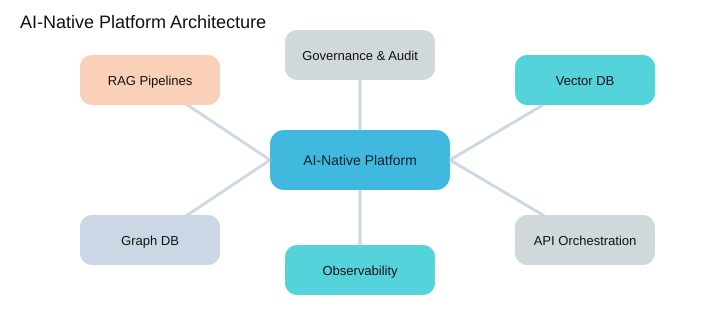

When I lead transformation work, I keep landing on the same AI-first architecture with four core parts: a graph database, vector databases, RAG pipelines, and API-first orchestration. This AI-native platform architecture is replacing legacy stacks because it makes AI usable inside real workflows, not just demos.

Arvind Krishna: "The winners will be the enterprises that operationalize AI—embedding it into workflows with the right architecture and governance."

The stack I keep ending up with (4 components)

- RAG pipelines to ground answers in trusted content

- Vector databases for fast similarity search over documents

- Graph databases for relationships, lineage, and permissions

- API-first orchestration to connect tools, events, and agents
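To make the first two components concrete, here is a toy retrieve-then-ground step in plain Python. It is a sketch under loud assumptions. `embed` is a word-count stand-in for a real embedding model, and the linear scan is exactly what a vector database replaces with an index. The document IDs and text are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real pipeline would call an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[str]:
    # Linear scan over every document; a vector database would use an index.
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(docs[d])), reverse=True)
    return ranked[:k]


def grounded_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    # Ground the answer in retrieved passages and keep their IDs for citation.
    context = "\n".join(f"[{d}] {docs[d]}" for d in retrieve(query, docs, k))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


docs = {
    "refund-policy": "refunds are issued within 30 days of purchase",
    "shipping": "orders ship within 2 business days",
    "privacy": "we never sell customer data",
}
print(retrieve("how many days for refunds", docs, k=1))  # ['refund-policy']
```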

Graph databases vs vector stores: when each stops being optional

I use vector stores when “find the closest meaning” is the job. I reach for graph databases when “who can do what, with which data, and why” matters. At scale, both become required for AI-native platforms that must explain decisions and enforce access.
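The “and why” part is where a graph earns its place: a permission check can return the path that grants access, not just a yes or no. This is a minimal sketch that assumes a hypothetical in-memory adjacency map (principal → group → dataset). A graph database would run the same reachability query at scale.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical permission graph: each edge means "can reach".
EDGES: Dict[str, List[str]] = {
    "alice": ["finance-team"],
    "finance-team": ["invoices"],
    "bob": ["support-team"],
    "support-team": ["tickets"],
}


def access_path(principal: str, resource: str,
                edges: Dict[str, List[str]] = EDGES) -> Optional[List[str]]:
    """Breadth-first search; returns the grant path (the "why") or None."""
    parents: Dict[str, Optional[str]] = {principal: None}
    queue = deque([principal])
    while queue:
        node = queue.popleft()
        if node == resource:
            path = []
            while node is not None:  # walk back to the principal
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in edges.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def permitted(principal: str, doc_ids: List[str]) -> List[str]:
    # Filter vector-search hits down to what this principal may see.
    return [d for d in doc_ids if access_path(principal, d) is not None]


print(access_path("alice", "invoices"))  # ['alice', 'finance-team', 'invoices']
print(permitted("bob", ["invoices", "tickets"]))  # ['tickets']
```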

Integration changes: events, tools, and permissions become the product

In AI-native platforms, integration is not a side project. Events trigger agents, tools become callable APIs, and permissions are enforced in every step. That means AI infrastructure systems like observability, evaluation, model registry, and prompt/version control are dependencies—not add-ons.

My “two-speed” rule + one gripe

Prototypes move fast; platforms move safely. I set SLOs such as p95 latency under 300 ms for edge inference and audit-log retention of 365 days. My gripe: legacy ticketing can block agents for days, killing productivity and hybrid-cloud momentum, even as distributed networks and interconnected AI superfactories push infrastructure efficiency forward.
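Both SLOs are easy to check mechanically. This is a minimal sketch that assumes latency samples in milliseconds and the nearest-rank definition of p95. Other percentile definitions interpolate and give slightly different numbers. The function names are hypothetical.

```python
from typing import Dict, List


def p95(samples_ms: List[float]) -> float:
    """Nearest-rank 95th percentile: the smallest sample such that at least
    95% of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def slo_report(latencies_ms: List[float], log_retention_days: int,
               p95_target_ms: float = 300,
               retention_target_days: int = 365) -> Dict[str, object]:
    observed = p95(latencies_ms)
    return {
        "p95_ms": observed,
        "latency_ok": observed < p95_target_ms,
        "retention_ok": log_retention_days >= retention_target_days,
    }


# 19 fast requests and one 900 ms outlier: nearest-rank p95 is still 120 ms.
print(slo_report([120] * 19 + [900], log_retention_days=365))
# {'p95_ms': 120, 'latency_ok': True, 'retention_ok': True}
```

A p95 target tolerates the occasional outlier but still fails once slow responses become routine. That makes it a better SLO than the maximum.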

| Item | Value |
|---|---|
| Core platform components | 4 (graph DB, vector databases, RAG pipelines, API-first orchestration) |
| SLO examples | p95 latency < 300 ms; audit-log retention 365 days |

Cloud edge intelligence: hybrid wins (even if it’s annoying)

In AI-era transformation work, the pattern I keep seeing is clear: cloud-edge intelligence works best as a compromise. I train big models in the cloud, then push real-time AI reasoning closer to where sensors, cameras, and robots create data. That’s hybrid cloud intelligence in practice, and yes, debugging edge deployments is humbling.

Train in cloud, infer at the edge

Heavy training stays in the cloud for scale. But edge AI computing handles mission-critical inference when latency and bandwidth matter. Multimodal inputs (video + audio + telemetry) make this even more important, because shipping everything to the cloud is slow and costly.

Use cases I’ve actually seen stick

- Retail shelf alerts (out-of-stock, planogram issues)

- Factory QA (vision checks on the line)

- Remote monitoring (healthcare and field assets)

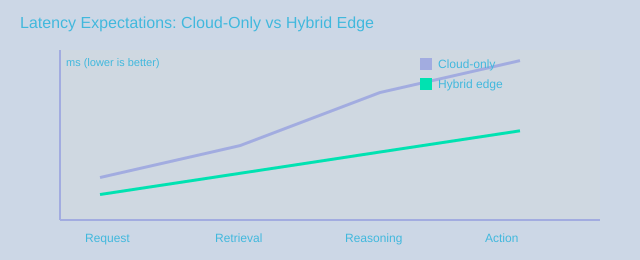

Latency as a leadership metric

I now treat latency like a KPI. Edge inference cuts latency compared with cloud-only deployment because it skips the network round trip to a remote data center. Faster responses improve safety and user trust.

Werner Vogels: "Everything fails all the time; the art is building systems that recover gracefully—especially at the edge."

Security trade-offs

Data gravity keeps sensitive data local, but offline modes and the reality of patching edge devices add risk. AI infrastructure optimization means planning updates, key management, and rollback like product features.
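Treating rollback as a feature can be as simple as never overwriting the last known-good model. This is a minimal sketch that assumes a hypothetical `EdgeModelSlot` and a `health_check` callable, such as a small on-device evaluation set. Key rotation and signed updates would sit around this logic and are not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class EdgeModelSlot:
    """Tracks the active model version and the last known-good one."""
    active: str
    previous: Optional[str] = None
    history: List[Tuple[str, str]] = field(default_factory=list)

    def update(self, new_version: str, health_check: Callable[[str], bool]) -> bool:
        old_active, old_previous = self.active, self.previous
        self.active, self.previous = new_version, old_active
        if health_check(new_version):
            self.history.append(("promoted", new_version))
            return True
        # Health check failed: restore the exact prior state.
        self.active, self.previous = old_active, old_previous
        self.history.append(("rolled_back", new_version))
        return False


slot = EdgeModelSlot(active="v1")
slot.update("v2", health_check=lambda v: True)   # promoted
slot.update("v3", health_check=lambda v: False)  # rolled back
print(slot.active, slot.previous)  # v2 v1
```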

Quick decision tree

- Need <500ms? Run inference at edge.

- Large retraining? Cloud.

- Intermittent network? Edge with offline fallback.
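The decision tree above translates directly into a placement function. This is a sketch under assumptions: the function name and labels are hypothetical. The final fallback to cloud when no edge trigger applies is my assumption and is not part of the tree.

```python
from typing import Optional


def place(workload: str, latency_budget_ms: Optional[float] = None,
          network_reliable: bool = True) -> str:
    """Return where a workload should run, following the quick decision tree."""
    if workload == "retraining":
        return "cloud"  # large retraining needs cloud-scale compute
    if not network_reliable:
        return "edge+offline-fallback"  # keep working when the link drops
    if latency_budget_ms is not None and latency_budget_ms < 500:
        return "edge"  # a cloud round trip would eat the budget
    return "cloud"  # assumption: default when no edge trigger applies


print(place("retraining"))                               # cloud
print(place("inference", latency_budget_ms=200))         # edge
print(place("inference", 2000, network_reliable=False))  # edge+offline-fallback
```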

| Item | Guidance |
|---|---|
| Deployment split | Training = cloud; inference = edge (hybrid) |
| Latency rationale | Edge inference reduces latency vs cloud-only |
| Remote monitoring | 49% see benefits from tech-enabled patient engagement |

Physical AI applications: when software grows hands and eyes

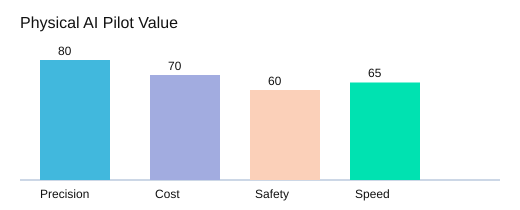

In 2026, physical AI applications move AI from screens into machines, sensors, and devices. The shift is speeding up because it can raise precision and lower operational costs while also expanding what teams can do. But physical AI robotics feels different: when software controls motion, mistakes cost more than embarrassment.

Rodney Brooks: "Robots are hard—not because intelligence is mysterious, but because the world is messy."

Where I’d pilot first (3 domains)

- Warehouse picking: fewer mis-picks, safer lifts, steadier throughput.

- Predictive maintenance: detect wear early, reduce downtime, protect assets.

- Smart inspection: consistent checks for defects humans may miss.

Humans-in-the-loop is a feature

In my enterprise AI strategies, I treat human review as a safety layer: clear handoffs, stop buttons, and escalation paths. Governance matters here. Safety constraints, audit logs, and incident-response drills should be designed before rollout.
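Those three ingredients (handoffs, a stop button, escalation) can be expressed as a small gate that sits in front of every physical action. This is a minimal sketch. The class name, the 0.9 confidence threshold, and the `high_impact` flag are hypothetical, and a real system would also enforce the stop in hardware.

```python
from enum import Enum
from typing import List, Tuple


class Decision(Enum):
    PROCEED = "proceed"
    ESCALATE = "escalate"
    HALT = "halt"


class HumanInTheLoopGate:
    """Safety layer in front of physical actions: a stop button that overrides
    everything, and escalation for low-confidence or high-impact actions."""

    def __init__(self, min_confidence: float = 0.9) -> None:
        self.min_confidence = min_confidence  # hypothetical threshold
        self.stopped = False
        self.audit: List[Tuple[str, str]] = []

    def press_stop(self, operator: str) -> None:
        self.stopped = True
        self.audit.append(("stop", operator))

    def check(self, action: str, confidence: float, high_impact: bool) -> Decision:
        if self.stopped:
            decision = Decision.HALT
        elif high_impact or confidence < self.min_confidence:
            decision = Decision.ESCALATE  # hand off to a human operator
        else:
            decision = Decision.PROCEED
        self.audit.append((action, decision.value))
        return decision


gate = HumanInTheLoopGate()
print(gate.check("move pallet", confidence=0.97, high_impact=False).value)        # proceed
print(gate.check("lift overhead load", confidence=0.99, high_impact=True).value)  # escalate
gate.press_stop("operator-1")
print(gate.check("move pallet", confidence=0.97, high_impact=False).value)        # halt
```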

Edge AI computing + Cloud edge intelligence

With edge AI computing, inference happens near the sensor, improving latency and data quality. It also shifts responsibility: if the edge model filters data, I must document what it drops and why. Power is a real constraint (an illustrative budget is under 10 W of inference power per device), so models must be small and well tested.
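“Document what it drops and why” can be built into the filter itself. This is a minimal sketch that assumes hypothetical `Reading` records with a 0–1 quality score and an illustrative valid range. The power check simply mirrors the illustrative 10 W budget.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Reading:
    sensor_id: str
    value: float
    quality: float  # hypothetical 0..1 signal-quality score


def filter_readings(readings: List[Reading], min_quality: float = 0.8,
                    valid_range: Tuple[float, float] = (0.0, 100.0)
                    ) -> Tuple[List[Reading], List[Tuple[str, str]]]:
    """Drop low-quality or out-of-range readings on the device, and keep a
    (sensor_id, reason) record for every drop so the filtering is auditable."""
    kept, dropped = [], []
    lo, hi = valid_range
    for r in readings:
        if r.quality < min_quality:
            dropped.append((r.sensor_id, "low_quality"))
        elif not lo <= r.value <= hi:
            dropped.append((r.sensor_id, "out_of_range"))
        else:
            kept.append(r)
    return kept, dropped


def within_power_budget(measured_watts: float, budget_watts: float = 10.0) -> bool:
    # Default mirrors the illustrative < 10 W per-device budget.
    return measured_watts < budget_watts


kept, dropped = filter_readings([Reading("s1", 42.0, 0.95),
                                 Reading("s2", 42.0, 0.40),
                                 Reading("s3", 250.0, 0.99)])
print([r.sensor_id for r in kept], dropped)
# ['s1'] [('s2', 'low_quality'), ('s3', 'out_of_range')]
print(within_power_budget(7.5))  # True
```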

A tiny sci-fi moment: the robot explains itself

Imagine an inspector robot rejects a part and I ask “why?” It should answer with sensor evidence, thresholds, and the last calibration, not “the model said so.” That’s how cloud-edge intelligence earns trust.
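One way to make that answer possible is to have every verdict carry its evidence as a structured record. This is a minimal sketch that assumes a single hypothetical measurement (deviation in millimetres) checked against a tolerance. The field names, sensor name, and values are illustrative.

```python
from dataclasses import asdict, dataclass
from datetime import date


@dataclass
class InspectionExplanation:
    """Evidence behind a verdict: what was measured, against which threshold,
    by which sensor, and when that sensor was last calibrated."""
    part_id: str
    verdict: str
    deviation_mm: float
    tolerance_mm: float
    sensor: str
    last_calibration: date

    def summary(self) -> str:
        return (f"{self.part_id} {self.verdict}: {self.sensor} measured a deviation of "
                f"{self.deviation_mm} mm against a tolerance of {self.tolerance_mm} mm "
                f"(last calibration {self.last_calibration.isoformat()})")


def inspect_part(part_id: str, deviation_mm: float, tolerance_mm: float,
                 sensor: str, last_calibration: date) -> InspectionExplanation:
    verdict = "rejected" if deviation_mm > tolerance_mm else "accepted"
    return InspectionExplanation(part_id, verdict, deviation_mm, tolerance_mm,
                                 sensor, last_calibration)


result = inspect_part("P-17", 0.42, 0.25, "laser-gauge-2", date(2026, 1, 5))
print(result.summary())
# P-17 rejected: laser-gauge-2 measured a deviation of 0.42 mm against a tolerance of 0.25 mm (last calibration 2026-01-05)
print(asdict(result)["tolerance_mm"])  # 0.25
```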

| Item | Data |
|---|---|
| Pilot domains | 3 (warehouse, maintenance, inspection) |
| Expected outcomes | Precision up, operational costs down, human capability up |
| Edge device constraint | Inference power budget < 10 W per device (illustrative) |

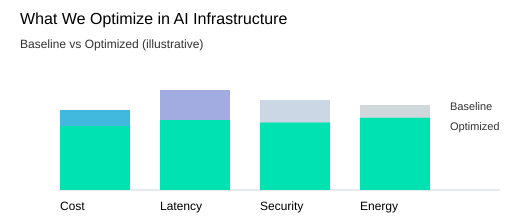

AI infrastructure optimization: the unsexy part that decides success

I used to start AI conversations with prompts. Now I start with GPUs, networking, and observability, because weak AI infrastructure systems turn “great models” into slow, costly demos. The skills market backs this up: AI role salaries are up 27% since 2019, so wasting expert time on broken pipelines is expensive.

AI superfactories: distributed efficiency beats “bigger data centers”

In 2026, AI infrastructure optimization is shifting toward distributed networks and interconnected AI superfactories to raise power density and lower unit costs. As Jensen Huang said:

"The next industrial revolution will be built on accelerated computing—and the factories will be AI factories."

Cost and governance: the hidden tax of experimentation

At enterprise scale, experiments create a hidden tax: idle GPUs, duplicate datasets, and unclear ownership. I push for security-audited releases and transparent data pipelines (open-source governance), plus a cadence: quarterly model risk review and continuous monitoring.

A practical scorecard I use

- Utilization: GPU/CPU time actually used

- Energy: watt-hours per training run or inference request

- Latency: end-to-end response time

- Security posture: access, secrets, audit trails
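The scorecard reduces to a few ratios over one reporting window. This is a minimal sketch that assumes hypothetical field names and checklist items. Energy is expressed as watt-hours per inference request, and security posture as the fraction of checklist items that pass.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class InfraWindow:
    """Raw numbers for one reporting window (field names are hypothetical)."""
    gpu_hours_used: float
    gpu_hours_provisioned: float
    energy_wh: float
    inference_requests: int
    p95_latency_ms: float
    security_checks: Dict[str, bool]


def scorecard(w: InfraWindow) -> Dict[str, float]:
    return {
        # Utilization: share of provisioned GPU time that did real work.
        "utilization_pct": round(100 * w.gpu_hours_used / w.gpu_hours_provisioned, 1),
        # Energy: watt-hours per inference request.
        "wh_per_request": round(w.energy_wh / w.inference_requests, 3),
        # Latency: end-to-end p95, measured upstream.
        "p95_latency_ms": w.p95_latency_ms,
        # Security posture: fraction of checklist items passing.
        "security_pass_rate": sum(w.security_checks.values()) / len(w.security_checks),
    }


window = InfraWindow(60, 100, 5000, 10000, 240,
                     {"access_reviewed": True, "secrets_rotated": True,
                      "audit_trail_on": False, "mfa_enforced": True})
print(scorecard(window))
# {'utilization_pct': 60.0, 'wh_per_request': 0.5, 'p95_latency_ms': 240, 'security_pass_rate': 0.75}
```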

Quantum computing AI (quick detour)

I’m watching quantum computing mainly for quantum-assisted optimizers in scheduling, routing, and portfolio-style resource allocation. I’m not betting the farm on it yet.

| Signal | What I take from it |
|---|---|
| AI role salaries: +27% since 2019 | Infrastructure waste is now a direct talent-cost multiplier |
| Strategy shift | Distributed networks + AI superfactories vs bigger data centers |
| Governance cadence | Quarterly model risk review; continuous monitoring |

Healthcare AI applications (and the patient-facing AI solutions reality check)

In vertical AI industries like healthcare, I’m seeing AI move beyond stand-alone diagnostics into real workflows at scale across five areas: diagnostics, symptom triage, treatment planning, clinical decision support, and remote monitoring. This shift is also pushing enterprise AI adoption to the front door of care, where trust matters most.

Diagnostics are only the beginning

When AI helps a clinician read an image, the risk is contained. When it helps a patient decide what to do next, the risk is shared. That’s why Patient-facing AI solutions must be designed like safety systems, not chat features.

Trust is the product (and the liability)

Research shows 49% see benefits from tech-enabled patient engagement. I believe that upside is real—but only if we add governance: audit trails, bias checks, model updates, and clear human oversight.

A mini playbook I use

- Phase 1: internal CDS with logging and clinician review

- Phase 2: clinician-assisted patient tools (scripts, summaries, triage prompts)

- Phase 3: monitored autonomy with strict escalation rules
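The phases and the “strict escalation rules” can be encoded so that a capability is simply unavailable until its phase is reached. This is a minimal sketch and not clinical guidance. The capability names, the 0.9 confidence threshold, and the `red_flag` input (assumed to come from a validated upstream check) are all hypothetical.

```python
from typing import Dict, Set

# Hypothetical capability names per rollout phase; not a clinical standard.
PHASE_CAPABILITIES: Dict[int, Set[str]] = {
    1: {"internal_cds"},
    2: {"internal_cds", "patient_scripts", "patient_summaries", "triage_prompts"},
    3: {"internal_cds", "patient_scripts", "patient_summaries", "triage_prompts",
        "autonomous_triage"},
}


def allowed(phase: int, capability: str) -> bool:
    return capability in PHASE_CAPABILITIES.get(phase, set())


def route_triage(phase: int, red_flag: bool, confidence: float,
                 threshold: float = 0.9) -> str:
    """Escalation rule: red flags or low confidence always reach a clinician."""
    if red_flag or confidence < threshold:
        return "clinician"
    if allowed(phase, "autonomous_triage"):
        return "autonomous_with_monitoring"  # Phase 3 only
    if allowed(phase, "triage_prompts"):
        return "clinician_review"  # Phase 2: a clinician reviews AI output first
    return "clinician"  # Phase 1: no patient-facing triage at all


print(route_triage(3, red_flag=True, confidence=0.99))   # clinician
print(route_triage(2, red_flag=False, confidence=0.95))  # clinician_review
print(route_triage(3, red_flag=False, confidence=0.95))  # autonomous_with_monitoring
```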

What I’d measure

- Engagement (completion, follow-through)

- Safety events (near-misses, escalations)

- Clinician time saved (minutes per case)

“Better is possible. It does not take genius. It takes diligence. It takes moral clarity. It takes ingenuity.” — Atul Gawande

The awkward truth: empathy doesn’t deploy via API. I treat AI as support, and humans as care.

| Metric | Value |
|---|---|
| Report benefits from tech-enabled patient engagement | 49% |
| Care workflow areas expanding | 5 |
| Rollout phases (suggested) | Phase 1: internal CDS; Phase 2: clinician-assisted patient-facing tools; Phase 3: monitored autonomy |

Conclusion: my leadership cheat-sheet for AI trends 2026

I started this journey with pilot chaos: too many tools, unclear owners, and “wow” demos that never shipped. Looking at digital transformation trends for 2026, I’m ending with calmer choices: deliberate architecture, tighter governance, and outcomes I can measure.

My three bets for the top AI trends of 2026

If I had to bet on three AI trends for 2026, they would be multi-agent systems, AI-native platforms, and hybrid edge intelligence. Agentic workflows will reshape work, moving people into oversight and strategy. Multi-agent systems and AI-native architectures are becoming the core of enterprise AI planning for 2026, especially as IDC projects that 45% of organizations will be orchestrating AI agents at scale by 2030.

My 30/60/90 self-audit for Enterprise AI strategies

| Horizon | What I’d change |
|---|---|
| 30 days | Pick 2 use cases with clear KPIs; lock data access and security baselines. |
| 60 days | Stand up governance (model risk, audit trails); standardize an AI-native platform path. |
| 90 days | Deploy one agentic workflow with human-in-the-loop; train roles and run incident drills. |

Wild card: your “AI operating system” as a city

I picture the enterprise AI stack like a city: platforms are roads, data is utilities, and governance is zoning laws. I still don’t know the perfect zoning for every team—but I know we need it.

“The hardest part of transformation isn’t the technology—it’s the culture.” —Ginni Rometty

To keep the human part intact, I invest in skills, clear roles, and psychological safety. Transformation isn’t a program; it’s a practice.

TL;DR: If you’re leading digital transformation in 2026, prioritize enterprise multi-agent use cases, invest in AI-native platform architecture (vector databases + RAG + orchestration), move inference to the edge within a hybrid cloud-edge model, optimize your AI infrastructure, and pilot vertical AI with tight governance and measurable outcomes.
