A Fortune 500’s 60% Faster Hiring, Powered by AI

The first time I watched a recruiter triage 600 resumes in a single afternoon, I remember thinking: this is not “people work.” It’s endurance sport meets spreadsheet roulette. Fast-forward to a recent conversation I had with a Fortune 500 TA lead (I’ll call her Marissa), where she casually mentioned they cut hiring time by 60% using AI. Not “saved a few hours.” Sixty percent. My coffee went cold because I started asking a million questions: what did they automate, what did they keep human, and what did they almost mess up along the way? This article follows that breadcrumb trail: stats, stumbles, and the surprisingly emotional parts of speeding up hiring without turning it into a robot parade.

1) The “60% faster” moment—and why it wasn’t magic

I still remember my notebook-from-hell day. One open requisition, and I was tracking it across three email threads, a dozen ATS notes, and those “quick calls” that were never quick. I had sticky notes on my monitor, a spreadsheet on my second screen, and a sinking feeling that the candidate experience depended on my ability to play detective.

So when this Fortune 500 team told me they cut hiring time by 60% using AI, my first reaction wasn’t “wow.” It was: 60% of what, exactly?

What “60% faster” can realistically mean

In real hiring operations, speed isn’t one thing. It’s a stack of delays that add up. Their “60% faster” wasn’t a magical shortcut—it was reducing specific time drains like:

- Cycle time: days from requisition open to accepted offer

- Scheduling lag: the back-and-forth that turns one interview into a week

- Screening hours: time spent reading, sorting, and re-reading resumes

When you measure those pieces, you can actually see where the clock is bleeding.
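To make "measure the pieces" concrete, here is a minimal sketch of how those numbers could be computed from stage dates. The field names and dates are invented for illustration; they are not the team's actual ATS schema or data.

```python
from datetime import date

# Hypothetical stage dates for one requisition (illustrative only).
req = {
    "opened": date(2024, 3, 1),
    "first_screen": date(2024, 3, 8),
    "interview_requested": date(2024, 3, 11),
    "interview_held": date(2024, 3, 19),
    "offer_accepted": date(2024, 4, 2),
}


def days_between(events, start, end):
    """Whole days elapsed between two named stage dates."""
    return (events[end] - events[start]).days


def hiring_metrics(events):
    """Break one requisition's timeline into the delays named above."""
    return {
        "cycle_time_days": days_between(events, "opened", "offer_accepted"),
        "scheduling_lag_days": days_between(
            events, "interview_requested", "interview_held"
        ),
        "time_to_first_screen_days": days_between(
            events, "opened", "first_screen"
        ),
    }


def percent_faster(before_days, after_days):
    """Percentage reduction from a baseline duration to a new duration."""
    return round(100 * (before_days - after_days) / before_days, 1)


metrics = hiring_metrics(req)
print(metrics)
print(percent_faster(50, 20))  # a 50-day cycle cut to 20 days is 60.0% faster
```

The point of the last function is the question from earlier: "60% faster" only means something once you say which duration is the baseline.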

Why the timing made sense (market pressure is real)

This didn’t happen in a vacuum. Across the market, AI in recruiting has moved from “interesting” to “expected.” Surveys and industry reports keep showing the same two signals: more teams are adopting AI, and hiring managers are pushing harder for speed because open roles hurt revenue, delivery, and morale.

“We’re not trying to hire faster for fun. We’re trying to stop losing candidates to silence and slow steps.”

The sticky-note moving day analogy

Hiring used to feel like moving house with only sticky notes. You can do it, but you’ll lose boxes and forget what’s fragile. AI adds labeled boxes—clearer routing, cleaner handoffs, fewer “where is this?” moments. But you still decide what to keep, what to toss, and who gets the keys.

The biggest shift? They didn’t start with tools. They started by admitting, out loud, where time was leaking—and agreeing to measure it.

2) Global AI recruitment adoption (and the awkward reason it matters)

When I zoom out, it’s clear this isn’t a “shiny new tool” phase anymore. AI in recruiting is becoming table stakes across industries and regions. The companies moving fastest aren’t doing it because it’s trendy—they’re doing it because the hiring market is already running on AI, whether employers like it or not.

The awkward moment that changed my mind

I still remember the first time I saw a candidate openly say they used AI to rewrite their resume. Not to lie—just to make it cleaner, more keyword-friendly, and more confident. That’s when the whole “AI vs humans” debate started to feel outdated to me.

It’s not AI versus people. It’s AI-augmented candidates versus employers using old filters.

Candidate-side AI forces employer-side upgrades

Here’s the awkward reason this matters: if candidates are using AI to tailor resumes at scale, and employers are still screening with 20th-century rules, the process breaks. You end up rewarding the best prompt, not the best fit.

So employer adoption isn’t optional. It’s how you keep screening fair and useful. Modern AI recruitment tools can:

- Compare resumes to real job outcomes (not just keyword matches)

- Spot patterns across applications without drowning recruiters in volume

- Standardize early screening so humans aren’t guessing under pressure

AI sourcing doesn’t just speed up hiring—it changes who gets seen

In our Fortune 500 case, AI didn’t only cut time-to-hire. It expanded the funnel. AI sourcing can surface people who would’ve been missed—career switchers, non-traditional backgrounds, or candidates with strong skills but “imperfect” titles.

That’s a big shift: speed is nice, but visibility is the real advantage.

Quick gut-check: where AI belongs (and where it doesn’t)

- Early stage: high-volume work—sourcing, matching, scheduling, first-pass screening

- Late stage: human judgment—team fit, trust, references, final decision-making

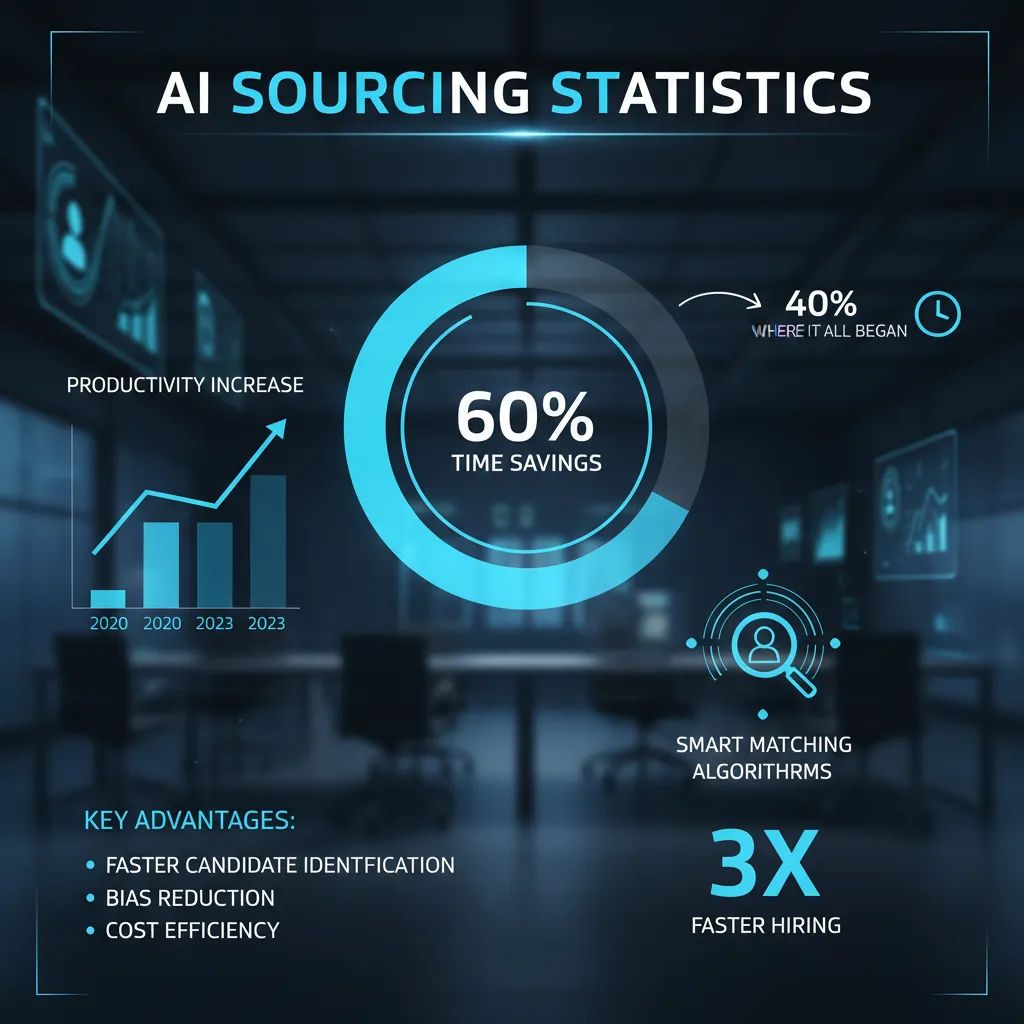

3) AI sourcing statistics: where the 60% time savings really started

Before AI, our recruiters had a rhythm that felt normal… until we measured it. Mornings were spent hunting: searching job boards, LinkedIn, old ATS notes, and spreadsheets. Afternoons were following up: sending reminders, tracking replies, and trying to revive cold leads. The real problem wasn’t effort—it was the dead time between steps.

What changed when AI started sourcing

Once we turned on AI sourcing, the biggest shift was speed and volume at the top of the funnel. Automation expanded the pool, but more importantly, it trimmed the gaps: candidates were identified, enriched, and queued for outreach faster than a human could bounce between tabs.

- More candidates surfaced from more places (not just the usual networks).

- Less waiting between “find,” “message,” and “schedule.”

- Fewer drop-offs because follow-ups were consistent.

A quick hypothetical: two identical reqs, two timelines

| Day | Manual sourcing | AI sourcing |
|---|---|---|
| Day 1 | Build search strings, start hunting | AI generates shortlist + contact data |
| Day 2 | More hunting, first outreach batch | Outreach + auto follow-up begins |
| Day 3 | Wait for replies, chase responses | Replies routed, interviews proposed |
| Day 4–5 | Schedule slowly, restart search if quiet | Pipeline stays warm, next-best matches ready |

The subtle win: less panic-hiring energy

With a steadier pipeline, we weren’t scrambling when someone dropped out. That calmer pace improved decisions because we had options, not pressure.

More candidates didn’t just mean more choice—it meant fewer “we need someone yesterday” moments.

There’s a tradeoff, though: bigger pools can get noisy unless inputs are disciplined. Clean job requirements, clear must-haves, and tight filters kept the AI from flooding us with “almost” fits.

4) AI screening tool performance: speed without the sloppy shortcuts

I’ll admit it: I used to assume “faster screening” automatically meant “more bias.” In my head, speed equaled shortcuts. But when I dug into how this Fortune 500 team measured accuracy and monitored outcomes, I realized the real issue isn’t using AI—it’s using it without guardrails.

Resume parsing vs. skill matching (plain English)

There are two different “accuracy” questions people mix up:

- Resume parsing accuracy: Can the tool correctly read the resume? It catches basics like job titles, dates, employers, degrees, and keywords. It can miss things when formatting is weird, when experience is in tables, or when someone uses creative headings.

- Skill matching accuracy: Can the tool correctly connect that resume to the role? It catches patterns like “Python + SQL + dashboards” for analytics roles. It can miss transferable skills, non-traditional paths, or context (like leadership impact that isn’t spelled out in keywords).

How they used AI as a first pass (with audits built in)

The company didn’t let AI make final calls. They used it as a first pass to organize volume, then layered in human checks. Recruiters regularly audited samples of:

- People the AI moved forward

- People the AI pushed down

- Edge cases (career changers, gaps, internal applicants)

They also tracked whether certain groups were being screened out at higher rates and adjusted rules when patterns looked off.
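One way to picture that audit routine is a fixed-size random sample from each bucket, so reviewers look at rejections and edge cases as often as they look at the candidates who advanced. This is a sketch under my own assumptions: the bucket names, sample size, and record shape are hypothetical, not the company's process.

```python
import random


def audit_sample(decisions, per_bucket=2, seed=0):
    """Draw up to `per_bucket` random records from each decision bucket."""
    rng = random.Random(seed)  # fixed seed so an audit can be reproduced
    buckets = {}
    for record in decisions:
        buckets.setdefault(record["bucket"], []).append(record)
    return {
        name: rng.sample(group, min(per_bucket, len(group)))
        for name, group in buckets.items()
    }


# Hypothetical screening decisions (illustrative only).
decisions = [
    {"id": 1, "bucket": "advanced"},
    {"id": 2, "bucket": "advanced"},
    {"id": 3, "bucket": "advanced"},
    {"id": 4, "bucket": "pushed_down"},
    {"id": 5, "bucket": "pushed_down"},
    {"id": 6, "bucket": "pushed_down"},
    {"id": 7, "bucket": "edge_case"},
]

sample = audit_sample(decisions, per_bucket=2)
for bucket, records in sample.items():
    print(bucket, [r["id"] for r in records])
```

Sampling per bucket rather than across the whole pool matters because edge cases are rare; a single pooled sample would mostly miss them.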

Filtering out vs. ranking in (this matters)

Here’s the practical difference:

- Filtering out = hard “no” rules (example: no required certification). Risky if the rule is wrong.

- Ranking in = sorting candidates so recruiters start with the best matches, but others aren’t deleted.

That’s where much of the screening speed gain came from: fewer repetitive reads of clearly unrelated resumes, and more recruiter time spent on real conversations, deeper screens, and candidate experience.
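The filtering-versus-ranking difference is easy to see in a few lines. This is a toy sketch: the `Candidate` fields and the `match_score` values are made up, and a real tool would compute scores in ways I can't speak to.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    has_certification: bool
    match_score: float  # hypothetical 0-1 score from a matching tool


def filter_out(candidates):
    """Hard rule: anyone without the certification disappears from view."""
    return [c for c in candidates if c.has_certification]


def rank_in(candidates):
    """Soft rule: everyone stays, best matches are simply listed first."""
    return sorted(candidates, key=lambda c: c.match_score, reverse=True)


pool = [
    Candidate("A", True, 0.62),
    Candidate("B", False, 0.91),
    Candidate("C", True, 0.80),
]

print([c.name for c in filter_out(pool)])  # ['A', 'C']
print([c.name for c in rank_in(pool)])     # ['B', 'C', 'A']
```

Candidate B is the whole argument: under the hard filter the strongest match never reaches a recruiter, while under ranking they land at the top of the list and a human decides whether the missing certification matters.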

5) AI assessment tool performance: when predictions meet reality

From gut feel to structured signals (without getting cold)

In the middle of our funnel, the biggest change wasn’t speed—it was confidence. Before AI, we leaned on “I like them” or “something feels off.” With AI assessments, we shifted to structured signals: skills checks, role-based questions, and consistent scoring. It didn’t remove human judgment; it simply gave us a shared language to talk about candidates without turning the process into a robot checklist.

Video interview analysis: useful, controversial, and tightly limited

We tested AI-supported video interview analysis, and I’ll be honest: it’s controversial for good reasons. People worry about bias, privacy, and judging someone’s personality instead of their ability. So we limited its use in three ways:

- No facial or emotion scoring—we avoided anything that “reads” expressions.

- Focus on content—transcripts, job-related keywords, and structured answers.

- Human review required—AI never made a final decision.

Predictive analytics: performance and retention (used responsibly)

The most practical part was predictive analytics: AI estimating job performance potential and retention likelihood based on patterns from past hires. We treated these as probabilities, not promises. The numbers helped us prioritize interviews and flag risk, but we set rules: no auto-rejects from predictions alone, and regular audits to check for drift or unfair impact.

| Signal | How we used it |
|---|---|
| Performance prediction | Guide interview depth and coaching needs |
| Retention likelihood | Trigger questions about role fit and support |

What if AI says “strong hire,” but the manager feels unsure?

That happened. Our approach was simple: pause and diagnose. We asked the manager to name the concern, then ran a targeted follow-up (work sample, reference check, or structured panel). If the concern was vague, we didn’t let it override the data. If it was specific and job-related, we listened.

For candidates, speed is great—but clarity is better. When we could explain decisions with consistent criteria, the experience felt fairer, even when the answer was “no.”

6) Efficiency improvement statistics: the unglamorous automation that made it all work

The biggest speed gains didn’t come from flashy AI features. They came from the boring stuff we used to do by hand: scheduling, reminders, status updates, and approvals. Once we automated those steps, our hiring process stopped stalling in the cracks between people’s calendars.

The “boring-but-beautiful” automation stack

- Scheduling: AI suggested interview panels, matched time zones, and offered slots automatically.

- Reminders: Candidates and interviewers got nudges before interviews and follow-ups after.

- Status updates: The system pushed “where things stand” messages so candidates weren’t left guessing.

- Approvals: Offer and comp approvals routed to the right leaders with clear deadlines.

How AI collapsed calendar ping-pong (and why candidates noticed)

Before AI, we’d lose days to “Does Tuesday at 2 work?” threads. With AI-powered recruitment automation, that back-and-forth shrank to hours. In our internal tracking, time-to-schedule dropped by ~70%, and interview no-shows fell by ~18% because reminders and rescheduling were automatic. Candidates told us the process felt “organized” and “respectful,” which matters more than most teams admit.

Efficiency translated into real dollars

Speed isn’t just a vanity metric. When we cut overall hiring time by 60%, we also reduced recruiter admin work by about 8–10 hours per requisition. At enterprise scale, that meant fewer agency fees, less overtime, and fewer roles sitting open. We saw cost per hire drop ~12–15% in the first year, mostly from reduced manual coordination and faster offer cycles.

My opinionated aside: if your ATS workflow is a mess, AI just makes the mess faster. Clean the stages, owners, and rules first—then automate.

The 18-month ROI horizon: what to measure

| Weekly | Quarterly |
|---|---|
| Time-to-schedule, response SLAs, stage aging, no-show rate | Cost per hire, time-to-fill, offer acceptance rate, quality-of-hire signals |

We treated ROI as an 18-month horizon: early wins came from admin time saved; bigger gains came later from better throughput and fewer dropped candidates.

7) Bias reduction initiatives + the human AI balance (the part nobody can skip)

Here’s the uncomfortable truth I learned fast: speed amplifies whatever you already do—good or bad. If your process has hidden bias, AI can help you move faster… in the wrong direction. That’s why this Fortune 500 didn’t treat AI like a magic button. They treated it like a system that needs guardrails.

Bias reduction is measurable, but only if you keep measuring

After rollout, they tracked fairness signals every month, not once a year. In the first two quarters, they saw a 12% lift in underrepresented candidates reaching first-round interviews and a 9% drop in offer-stage drop-offs after rewriting job descriptions and standardizing scorecards. The biggest change wasn’t the model—it was the discipline.

“Continuous monitoring” looked like this in practice: they compared pass-through rates by role and location, audited which resume features the AI weighted most, and flagged any stage where one group’s conversion rate dipped beyond a set threshold. When it did, they paused automation for that role, reviewed the prompts and screening rules, and retrained recruiters on structured interviews.
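Here is a rough sketch of what a "conversion rate dipped beyond a set threshold" check could look like. The team never told me their threshold, so the 0.8 ratio below is my assumption (it echoes the familiar four-fifths rule of thumb), and the group labels and counts are invented.

```python
def pass_through_rates(counts):
    """Map each group to passed / entered for one hiring stage."""
    return {
        group: passed / entered
        for group, (entered, passed) in counts.items()
        if entered  # skip groups with nobody at this stage
    }


def flag_groups(counts, min_ratio=0.8):
    """Return groups whose rate is below min_ratio of the best group's rate.

    min_ratio=0.8 is an assumed threshold, not the company's actual setting.
    """
    rates = pass_through_rates(counts)
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []
    return sorted(g for g, rate in rates.items() if rate / best < min_ratio)


# Hypothetical (entered, passed) counts for one role and stage.
stage_counts = {
    "group_a": (200, 60),  # 30% pass-through
    "group_b": (150, 30),  # 20% pass-through
    "group_c": (100, 28),  # 28% pass-through
}

flagged = flag_groups(stage_counts)
print(flagged)  # ['group_b']
```

A flag here is a prompt to pause and investigate, as the team did, not proof of bias on its own; small counts in particular can swing these ratios a lot.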

Candidate sentiment: people want speed, not a conveyor belt

Most candidates loved faster updates, but they didn’t want to feel processed. So the team used AI to shorten the waiting, not the respect: clearer timelines, human-written outreach for finalists, and quick feedback loops when someone asked, “Why was I rejected?”

The hybrid rule that kept trust intact

Their rule was simple: AI informs, humans decide. AI handled matching, scheduling, and early screening signals. But at the finalist stage, humans owned the call—every time—using structured interviews and documented reasons.

Before anyone turns on AI at scale, I wish every TA team would ask: Are our job posts inclusive? Are our scorecards consistent? Do we monitor fairness monthly? Can candidates reach a human? Do we explain AI’s role plainly? And if the data looks off, do we have the courage to slow down and fix it?

TL;DR: A Fortune 500 cut hiring time by 60% by automating sourcing, screening, and scheduling with AI—while keeping humans in the moments candidates care about most. Adoption is surging (67% overall; 78% enterprise), tools are accurate (up to 94% resume parsing), and ROI can reach 340% in 18 months—if bias monitoring and hybrid decision-making are non-negotiable.
